Using Rollup to merge old logs with Elasticsearch 6.3

Log storage and analysis is one of the most popular uses of Elasticsearch today: it’s easy and it scales. But quickly enough, adding nodes costs too much money, and storing logs covering multiple months or years can be tricky.

A solution I often see when doing Elasticsearch Consulting (shameless plug) is to keep detailed logs only for the most consulted time range (the last 60 days, for example), and to merge older logs into a new index, by day or by month, instead of keeping one document per event. This allows us to have a huge retention period without the pain of dealing with large indexes and enormous bills from Amazon 💸.

This merging is usually done by hand, with the help of the Bulk, Scan, Scroll and Delete By Query APIs… But those days are gone, as Elasticsearch 6.3 now ships with a Rollup functionality that I’m going to cover in this article.

⚠️ This functionality is part of X-Pack Basic and is marked as experimental.

Gif from https://twitter.com/elastic/status/1007281219988582400.

Summarizing historical data

Getting some data in

I will use this awesome python tool to quickly generate one million fake logs (urls.txt is just a list of real urls):

python es_test_data.py --es_url=http://localhost:9200 --count=1000000 --dict_file=urls.txt --index_name=access_logs --format=url:dict:1:1,bytes:int:1337:9999,datetime:tstxtIt will generate documents like this:

{

"_index": "access_logs",

"_type": "test_type",

"_id": "_b0fb2QBzU1iuAe3M6NW",

"_score": 1,

"_source": {

"url": "/blog/typography-the-right-way-with-jolitypo",

"bytes": 5319,

"datetime": "2018-07-26T19:52:02.000-0000"

}

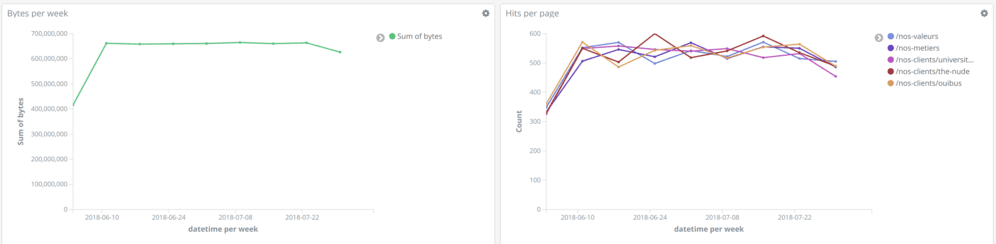

}I use them to graph hits per pages and downloaded bytes in time:

So right now my access_logs index is full of logs from the last 30 days, but I only need fine granularity over the last 10 days. Let’s create a Rollup job to merge logs older than 10 days into one-hour buckets.

Building a Rollup Job

This query will create a job called access_logs, merging documents by url and grouping them by hour, only for documents older than 10 days:

    PUT _xpack/rollup/job/access_logs
    {
      "index_pattern": "access_logs",
      "rollup_index": "access_logs_rollup",
      "cron": "*/30 * * * * ?",
      "page_size": 1000,
      "groups": {
        "date_histogram": {
          "field": "datetime",
          "interval": "1h",
          "delay": "10d"
        },
        "terms": {
          "fields": ["url.keyword"]
        }
      },
      "metrics": [
        {
          "field": "bytes",
          "metrics": ["min", "max", "sum"]
        }
      ]
    }

The job will not run right away: we have to start it, and then it will run automatically, following the “cron” schedule.

    POST /_xpack/rollup/job/access_logs/_start

Then, we can see it’s running via the Task API:

    GET /_tasks?detailed=true&actions=xpack/rollup*

Looking at the data

If we look at our new access_logs_rollup index, we will see documents like this:

    {
      "_index": "access_logs_rollup",
      "_type": "_doc",
      "_id": "873201474",
      "_score": 1,
      "_source": {
        "datetime.date_histogram.interval": "1h",
        "bytes.max.value": 6958,
        "datetime.date_histogram.timestamp": 1528286400000,
        "url.keyword.terms.value": "/blog",
        "_rollup.version": 1,
        "datetime.date_histogram.time_zone": "UTC",
        "bytes.sum.value": 12010,
        "url.keyword.terms._count": 2,
        "bytes.min.value": 5052,
        "datetime.date_histogram._count": 2,
        "_rollup.id": "access_logs"
      }
    }

They are not supposed to be queried via the _search API: as you can see, the field names are not exactly compatible with our raw documents. This will be handled by the new _rollup_search endpoint.
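To make the shape of these documents more concrete, here is a minimal Python sketch of the kind of grouping such a job performs: raw logs are bucketed by hour and by url, and only the min, max, sum and count of bytes are kept. The function name roll_up and the sample logs are hypothetical (the values are chosen to match the document above), and this only illustrates the idea; it is not Elasticsearch’s actual implementation.

```python
from collections import defaultdict
from datetime import datetime, timezone


def roll_up(logs):
    """Group raw logs by (hour, url) and keep min/max/sum/count of bytes."""
    buckets = defaultdict(list)
    for log in logs:
        # Timestamps look like "2018-07-26T19:52:02.000-0000".
        ts = datetime.strptime(log["datetime"], "%Y-%m-%dT%H:%M:%S.%f%z")
        # Truncate to the start of the hour, in UTC.
        hour = ts.astimezone(timezone.utc).replace(minute=0, second=0, microsecond=0)
        buckets[(hour, log["url"])].append(log["bytes"])

    rolled = []
    for (hour, url), sizes in sorted(buckets.items()):
        rolled.append({
            "datetime.date_histogram.timestamp": int(hour.timestamp() * 1000),
            "url.keyword.terms.value": url,
            "bytes.min.value": min(sizes),
            "bytes.max.value": max(sizes),
            "bytes.sum.value": sum(sizes),
            "datetime.date_histogram._count": len(sizes),
        })
    return rolled


# Hypothetical raw logs: two hits on /blog and one on /contact in the same hour.
sample_logs = [
    {"url": "/blog", "bytes": 5052, "datetime": "2018-06-06T12:03:10.000-0000"},
    {"url": "/blog", "bytes": 6958, "datetime": "2018-06-06T12:47:55.000-0000"},
    {"url": "/contact", "bytes": 2000, "datetime": "2018-06-06T12:15:00.000-0000"},
]
rolled = roll_up(sample_logs)
for doc in rolled:
    print(doc)
```

Three raw documents become two rolled-up ones here. The individual hits are gone, which is why only the metrics declared in the job can be queried afterwards.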

Looking at the indexes

If we look at our initial raw logs access_logs index, we can see that all the raw logs are still here:

    GET _stats?filter_path=indices.access_logs.primaries.docs

    {
      "indices": {
        "access_logs": {
          "primaries": {
            "docs": {
              "count": 1000000,
              "deleted": 0
            }
          }
        }
      }
    }

That’s because the Rollup API does not delete the old documents. You will have to clean that up yourself.

That’s the part where I’m not a big fan of the feature: if the goal is to merge old documents into a new index to save space, time and money, why can’t we delete those costly documents automatically?

Running statistics on the data

Let’s run the query used by the “Bytes per week” visualisation shown earlier:

    GET access_logs/_search
    {
      "size": 0,
      "aggs": {
        "2": {
          "date_histogram": {
            "field": "datetime",
            "interval": "1d"
          },
          "aggs": {
            "1": {
              "sum": {
                "field": "bytes"
              }
            }
          }
        }
      }
    }

For the 6th of June, it returns 27810099 bytes:

    {
      "1": {
        "value": 27810099
      },
      "key_as_string": "2018-06-06T00:00:00.000Z",
      "key": 1528243200000,
      "doc_count": 4888
    }

This query did not read anything from our new Rollup index: it was exactly the same as the one we created via Kibana. To use the new data our Rollup job has created, we have to change the GET access_logs/_search endpoint to a GET access_logs_rollup/_rollup_search endpoint:

    {
      "1": {
        "value": 27810099
      },
      "key_as_string": "2018-06-06T00:00:00.000Z",
      "key": 1528243200000,
      "doc_count": 4888
    }

As you can see, the answer is the same: 27810099 bytes. Again, that is because the raw data is still here, so the rolled-up documents are not needed. Let’s remove some documents now!
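The endpoint swap described above can be sketched in Python as follows. The helper name aggregation_request is hypothetical and nothing is sent over the network: it only builds the path and the body, to show that the aggregation itself stays identical and only the endpoint changes.

```python
import json


def aggregation_request(indexes, use_rollup):
    """Return (method, path, body) for the daily sum-of-bytes aggregation."""
    endpoint = "_rollup_search" if use_rollup else "_search"
    path = "/" + ",".join(indexes) + "/" + endpoint
    body = {
        "size": 0,
        "aggs": {
            "2": {
                "date_histogram": {"field": "datetime", "interval": "1d"},
                "aggs": {"1": {"sum": {"field": "bytes"}}},
            }
        },
    }
    return "GET", path, body


raw = aggregation_request(["access_logs"], use_rollup=False)
rolled = aggregation_request(["access_logs_rollup"], use_rollup=True)

for method, path, body in (raw, rolled):
    print(method, path)
    print(json.dumps(body, indent=2))
```

Both calls produce the same body, so an existing dashboard query can be reused as-is against the rolled-up index.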

Removing the old raw data

That’s a tricky part because there is no easy way to be sure a document has been rolled up… But as my job merges all documents older than 10 days, there is a good chance I can delete documents older than… 11 days.

    POST access_logs/_delete_by_query
    {
      "query": {
        "range": {
          "datetime": {
            "lte": "now-11d"
          }
        }
      }
    }

This query will not be run automatically, that’s up to you 😕. On most setups, you will just remove daily indexes instead of running a _delete_by_query, but the same fear applies: are you sure the data has been rolled up?
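One way to keep the deletion cutoff consistent with the job’s delay is to derive it instead of hard-coding it. The sketch below is a hypothetical helper (delete_older_than is not part of any Elasticsearch client) that builds the same Delete By Query body from the delay and a safety margin. It assumes the job has had time to catch up, and it does not prove that a given document was rolled up.

```python
def delete_older_than(delay_days, margin_days=1, field="datetime"):
    """Build a Delete By Query body targeting documents older than delay + margin."""
    if margin_days < 1:
        # Without a margin we could delete documents the job has not processed yet.
        raise ValueError("margin_days must be at least 1")
    cutoff = f"now-{delay_days + margin_days}d"
    return {"query": {"range": {field: {"lte": cutoff}}}}


# With the 10-day delay used by the job, this yields the "now-11d" cutoff.
body = delete_older_than(delay_days=10)
print(body)
```

If the job’s delay changes later, the cutoff follows automatically, which avoids deleting data that the job has not been allowed to see yet.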

Some 11 seconds later, you can run the query on /access_logs_rollup/_rollup_search again to see that even when the raw logs are gone, the aggregation still works! 🤘

    {
      "1": {
        "value": 27810099
      },
      "key_as_string": "2018-06-06T00:00:00.000Z",
      "key": 1528243200000,
      "doc_count": 4888
    }

Looking for fresh data too? You just have to cross-search between live and rolled-up indexes:

    GET access_logs,access_logs_rollup/_rollup_search
    {
      "size": 0,
      "aggs": {
        "2": {
          "date_histogram": {
            "field": "datetime",
            "interval": "1d"
          },
          "aggs": {
            "1": {
              "sum": {
                "field": "bytes"
              }
            }
          }
        }
      }
    }

And you will get both the old static data and the new raw statistics, merged together.

That’s it:

- We have the same results but only half the documents;

- All we had to change in our queries was the endpoint;

- We can scale, and keep a lot more logs without increasing our costs!

About the limitations of the Rollup API

Of course, there are some limitations to this API (they are documented here): for example, you will not get any _source back in the responses, as it is focused on statistics applications only.

Also, in my example, I could not have used _rollup_search for the second visualisation (Hits per page) because I did not compute that information inside my initial Job. You will have to be very cautious about what you will need in the merged index.
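As a rough illustration of that caution, the following Python sketch checks a job definition locally before relying on it for a visualisation. The JOB dictionary mirrors the groups and metrics of the job created earlier; the helper names job_covers_metric and job_groups_by are hypothetical and this is only a local check, not a call to Elasticsearch.

```python
# Groups and metrics copied from the access_logs job definition.
JOB = {
    "groups": {
        "date_histogram": {"field": "datetime", "interval": "1h", "delay": "10d"},
        "terms": {"fields": ["url.keyword"]},
    },
    "metrics": [{"field": "bytes", "metrics": ["min", "max", "sum"]}],
}


def job_covers_metric(job, field, metric):
    """True if the job explicitly lists this metric for this field."""
    return any(
        entry["field"] == field and metric in entry["metrics"]
        for entry in job.get("metrics", [])
    )


def job_groups_by(job, field):
    """True if the job groups documents on this field (terms or date histogram)."""
    groups = job.get("groups", {})
    in_terms = field in groups.get("terms", {}).get("fields", [])
    in_histogram = groups.get("date_histogram", {}).get("field") == field
    return in_terms or in_histogram


print(job_covers_metric(JOB, "bytes", "sum"))   # listed in the job
print(job_covers_metric(JOB, "bytes", "avg"))   # not listed in this job's configuration
print(job_groups_by(JOB, "url.keyword"))
```

Running this kind of check against every dashboard query before deleting raw data is cheap, and it makes missing metrics visible while the raw documents can still be rolled up again.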

About the cross-index search, be careful about collisions too. In my sample data, I saw some strange results for the exact day I used as the limit in the Delete By Query. I suspect that the rolled-up data is ignored for a bucket as soon as the raw index also has data in that bucket, because the statistics were correct when querying only the rolled-up data.
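The suspicion above can be modelled with a small Python sketch. It assumes, without confirmation from this article, that a bucket coming from the live index replaces the rolled-up bucket with the same key. The function merge_buckets and all bucket values except 27810099 are made up for the illustration.

```python
def merge_buckets(live, rolled):
    """Merge {day: sum_of_bytes} mappings, letting live buckets win on collision."""
    merged = dict(rolled)
    merged.update(live)  # a live bucket replaces the rolled-up one with the same key
    return dict(sorted(merged.items()))


# Rolled-up index: complete totals for the old days.
rolled = {"2018-06-05": 26000000, "2018-06-06": 27810099}
# Live index after the Delete By Query: the boundary day only keeps its last hours.
live = {"2018-06-06": 1200000, "2018-06-07": 28500000}

merged = merge_buckets(live, rolled)
print(merged)
```

Under that assumption, the boundary day reports only the bytes left in the live index and not the complete rolled-up total, which would explain odd numbers on exactly that day while a rollup-only query stays correct.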

Finally, the main issue I may have right now is raw log deletion. I find it hard to let the users deal with this as it can be tricky to remove only what is merged.

To conclude, this feature is very interesting and will simplify a lot of workflows, but right now it’s a bit young. I know that the Elastic team is looking at feedback from users, so do not hesitate to talk about it if you have any suggestions!

This article is based on Elasticsearch 6.3.1.
