Navigate through your infrastructure with Docker

This year I wrote several articles about Chef and how to automate your infrastructure and your applications. I also spent some time writing libraries around it and integrating this tool with all the processes and projects of our company.

Sometimes it’s good to step back and look at the bigger picture: can we do better, and more simply?

I have read several articles about Docker, tried it over the past few weeks and found it amazing. However, before going further, I asked myself a simple question:

What do we need to automate an infrastructure and applications?

Installation

The first step in a new project is to define, then install, all the applications and dependencies needed to run it. To automate this part, the script has to ensure that every server has the same applications in the same versions.
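To make “same applications, same versions on every server” concrete, here is a minimal Python sketch that compares what each server reports as installed against a pinned manifest. The package names, version numbers, server names and the `find_drift` helper are all hypothetical; a real tool would collect the installed versions from the package manager.

```python
# Hypothetical pinned manifest: the versions every server must run.
PINNED = {"nginx": "1.4.1", "php5": "5.5.3", "mysql-server": "5.5.32"}


def find_drift(pinned, installed_by_server):
    """Return {server: {package: (expected, actual)}} for each mismatch.

    A package missing on a server is reported with actual=None.
    """
    drift = {}
    for server, installed in installed_by_server.items():
        mismatches = {
            pkg: (version, installed.get(pkg))
            for pkg, version in pinned.items()
            if installed.get(pkg) != version
        }
        if mismatches:
            drift[server] = mismatches
    return drift


# Hypothetical versions, as each server might report them.
servers = {
    "web1": {"nginx": "1.4.1", "php5": "5.5.3", "mysql-server": "5.5.32"},
    "web2": {"nginx": "1.2.9", "php5": "5.5.3"},
}
print(find_drift(PINNED, servers))
# {'web2': {'nginx': ('1.4.1', '1.2.9'), 'mysql-server': ('5.5.32', None)}}
```

An installation script has done its job when this report is empty for every server.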

Configuration

Once installation is complete, let’s configure all the building blocks to fit the requirements. This configuration can be divided into three parts:

- Static (or default) configuration, which is the same across all servers: it can be shipped with the installation process.

- Dynamic configuration, which differs by environment (production, staging, development, etc.): a configuration management system handles this part.

- Dynamic infrastructure configuration, i.e. how the applications communicate with each other: the next part of this article covers this, and orchestration is your friend.
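The three layers above can be read as successive overrides. The following Python sketch merges them in that order; the keys, values and the `deep_merge`/`build_config` helpers are hypothetical, and in practice the third layer would be provided by the orchestrator rather than written as a literal dict.

```python
from copy import deepcopy


def deep_merge(base, override):
    """Return a new dict where override wins and nested dicts are merged."""
    result = deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = deep_merge(result[key], value)
        else:
            result[key] = deepcopy(value)
    return result


# 1. Static/default configuration, shipped with the installation.
DEFAULTS = {"db": {"port": 3306, "pool_size": 5}, "debug": False}

# 2. Per-environment configuration (hypothetical values).
ENVIRONMENTS = {
    "development": {"debug": True},
    "production": {"db": {"pool_size": 50}},
}


def build_config(env, infrastructure):
    """Apply defaults, then the environment, then infrastructure facts."""
    config = deep_merge(DEFAULTS, ENVIRONMENTS.get(env, {}))
    # 3. Infrastructure configuration, e.g. where the database actually runs.
    return deep_merge(config, infrastructure)


config = build_config("production", {"db": {"host": "10.0.0.10"}})
print(config)
# {'db': {'port': 3306, 'pool_size': 50, 'host': '10.0.0.10'}, 'debug': False}
```

Because each layer only overrides what it knows about, the defaults stay untouched and can ship with the installation as-is.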

Orchestration

Applications grow and may need several servers to run, but only in production: we do not want 100 servers when we debug an application in our local environment. Orchestration lets us organize an infrastructure: X servers for our database, Y for our frontends, etc.

Its role is to run the installation and configuration scripts across the whole infrastructure, giving them the knowledge they need to communicate with each other, since the orchestrator is aware of the overall map of the infrastructure.
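As a rough illustration of that overall map, here is a Python sketch of what an orchestrator might hand to the scripts of each node: its own role plus the addresses of the other roles. The inventory format, the addresses and the helper names are assumptions made for this example, not the API of any particular orchestration tool.

```python
# Hypothetical inventory: role -> addresses of the nodes playing that role.
INVENTORY = {
    "database": ["10.0.0.10"],
    "frontend": ["10.0.1.1", "10.0.1.2", "10.0.1.3"],
}


def node_context(inventory, role, address):
    """Data an orchestrator could pass to one node's install/config scripts.

    The node learns its own role and the addresses of every other role,
    something it cannot know on its own.
    """
    if address not in inventory.get(role, []):
        raise ValueError(f"{address} is not a {role} node")
    peers = {r: list(addrs) for r, addrs in inventory.items() if r != role}
    return {"role": role, "address": address, "peers": peers}


def plan(inventory):
    """One context per node, in inventory order."""
    return [node_context(inventory, role, address)
            for role, addresses in inventory.items()
            for address in addresses]


ctx = node_context(INVENTORY, "frontend", "10.0.1.2")
print(ctx["peers"])          # {'database': ['10.0.0.10']}
print(len(plan(INVENTORY)))  # 4

# Locally, the same roles can collapse onto a single machine.
LOCAL = {"database": ["127.0.0.1"], "frontend": ["127.0.0.1"]}
print(len(plan(LOCAL)))      # 2
```

Only the inventory differs between a laptop and a large production setup; the scripts receiving these contexts stay the same.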

Deployment

It’s common to automate the deployment of a new project through scripts, using Capistrano, Fabric or other deployment tools. But what does a deployment concretely consist of?

- Installation of an application (by pulling sources or a package);

- Configuration of this application for the server and environment;

- Making sure it can communicate with external services.

I think you know where I am going: all those tasks are already handled by the three previous points.

The main difference between deploying an application and the previous steps is timing and the ability to roll back, as the Release Early, Release Often principle is not optional.

So deploying the application can be done with the same system, just by adding some constraints:

- Deployments need to be fast;

- They need to be easy to roll back (and quickly, too).
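One common way to meet both constraints is to keep every release in its own directory and switch a `current` symlink between them, similar in spirit to the releases/current layout Capistrano uses. This Python sketch assumes a POSIX system and release directories whose names sort in deployment order (for example timestamps); the paths and function names are hypothetical.

```python
import os
import tempfile


def activate(releases_dir, release, current_link):
    """Point current_link at releases_dir/release in one atomic step.

    A temporary symlink is created, then renamed over the old link;
    os.replace() is atomic on POSIX, so "current" is never missing.
    """
    target = os.path.join(releases_dir, release)
    if not os.path.isdir(target):
        raise FileNotFoundError(target)
    tmp_link = current_link + ".tmp"
    if os.path.lexists(tmp_link):
        os.remove(tmp_link)
    os.symlink(target, tmp_link)
    os.replace(tmp_link, current_link)


def rollback(releases_dir, current_link):
    """Switch back to the release preceding the active one (sorted by name)."""
    releases = sorted(os.listdir(releases_dir))
    active = os.path.basename(os.readlink(current_link))
    index = releases.index(active)
    if index == 0:
        raise RuntimeError("no previous release to roll back to")
    activate(releases_dir, releases[index - 1], current_link)
    return releases[index - 1]


# Usage with hypothetical timestamped releases in a scratch directory.
base = tempfile.mkdtemp()
releases = os.path.join(base, "releases")
for name in ("20131001", "20131015"):
    os.makedirs(os.path.join(releases, name))
current = os.path.join(base, "current")
activate(releases, "20131015", current)  # deploy: one symlink switch
print(rollback(releases, current))       # 20131001
```

Both deploying and rolling back reduce to a single rename, which is why this layout is fast: the previous release is still on disk, untouched.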

Current State

Over the past few years, a large variety of automation tools have emerged. The popular ones all have several things in common:

- They are all built around the same principle, idempotence: you describe the desired state of the server, not how to get to that state;

- They do everything, from orchestration to installation and configuration;

- They are not OS-specific;

- They come with a whole new language or API to learn.
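Idempotence is easiest to see on a tiny example. This Python sketch declares the desired state “this file contains exactly this text” instead of a sequence of steps: the first run converges, later runs only check and change nothing. The check itself still runs every time, which is where repeated runs spend their time. The `ensure_file` name and the file path are hypothetical; real tools offer much richer resources.

```python
import os
import tempfile


def ensure_file(path, content):
    """Make sure `path` contains exactly `content`.

    Returns True if a change was applied, False if the file already
    matched. The comparison runs on every call, even when nothing changes.
    """
    if os.path.exists(path):
        with open(path, encoding="utf-8") as f:
            if f.read() == content:
                return False  # already in the desired state: do nothing
    with open(path, "w", encoding="utf-8") as f:
        f.write(content)
    return True


path = os.path.join(tempfile.mkdtemp(), "motd")
print(ensure_file(path, "Welcome\n"))  # True: first run converges
print(ensure_file(path, "Welcome\n"))  # False: second run only checks
```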

These principles are great but bring some drawbacks:

- It’s fast, but not as fast as we would like: configuring a system for the first time can take up to 10 minutes for a medium-sized application. This is normal and will be roughly the same whatever tool you use, as most of the time is spent in the system itself: package downloads, etc.

However, a second run can still take up to 1 minute (and much more on a complex infrastructure) even when no change is applied. So where is this minute spent? Idempotence is the cause: each time a script runs, it has to check every step and make sure it is synchronized with the desired state. This can be fast for a file diff, but very slow when checking the latest version of a package.

Furthermore, this script runs on every server, whereas it could be run only once and the resulting diff copied to the other servers. It’s always possible to build a system package or a pre-generated VM, but that requires implementing many things, which can be time-consuming and hard to maintain.

Finally, at this speed it can sometimes be very hard to synchronize a deployment across multiple servers.

- These solutions, each with its own large API or framework, add a new layer of complexity and investment: bringing these tools into a project has a cost at the beginning. This cost will certainly pay for itself in the end, but it can be hard to tell a Product Owner: “I have to spend one to two weeks writing installation and configuration scripts”, when you could start writing business code after a few lines of Linux shell.

- Last thing, it’s never truly OS-independent: a script will always have some specific code or configuration depending on whether it runs on CentOS or Debian, for example. Sure, an API exists to abstract this, but you have to keep it in mind when writing a script, even more so if you are like us: a service company building many different projects for many different clients, which does not want to rewrite the same things all the time.

Docker, another way of thinking

Docker was released publicly at the end of March 2013. Its main goal is to encapsulate an application and its dependencies within a container, using LXC.

Put simply, a container starts from a specific state and runs commands to reach a new state.

A state can be committed and pushed to a registry, and any other machine still at the old state can then pull the new one.

Is it fast?

Yes, it’s very fast! When pulling a new state, no commands are run if it has already been built: thanks to AUFS, it just applies a file diff between the old state and the new one. Most of the time, the main factor is the connection speed between the host and the registry.
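The following Python sketch is a deliberately simplified model of this idea, not Docker’s actual storage format: an image is an ordered list of layers, each layer is a file diff, pulling only downloads the layers the host is missing, and the visible filesystem is the layers applied bottom to top, as a union filesystem such as AUFS presents them. Layer ids, paths and contents are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    """One committed state: files added or changed, and files deleted."""
    layer_id: str
    changed: tuple = ()  # (path, content) pairs
    deleted: tuple = ()  # paths removed by this layer


def pull(image_layer_ids, registry, local_store):
    """Copy only the layers the host lacks; no build command is re-run.

    Returns the ids of the layers actually downloaded.
    """
    fetched = []
    for layer_id in image_layer_ids:
        if layer_id not in local_store:
            local_store[layer_id] = registry[layer_id]
            fetched.append(layer_id)
    return fetched


def materialize(layers):
    """Apply layers bottom to top, the way a union filesystem presents them."""
    files = {}
    for layer in layers:
        for path in layer.deleted:
            files.pop(path, None)
        files.update(layer.changed)
    return files


base = Layer("base", changed=(("/etc/issue", "Ubuntu 12.04"),))
java = Layer("java", changed=(("/usr/bin/java", "jre7"),))
war = Layer("war", changed=(("/root/jenkins.war", "v1"),))
registry = {layer.layer_id: layer for layer in (base, java, war)}

local = {"base": base, "java": java}  # the host already has the old state
image = ["base", "java", "war"]
print(pull(image, registry, local))   # ['war']
files = materialize(local[layer_id] for layer_id in image)
```

In this model, the cost of an update is the size of the missing layers, which matches the point above: the network, not the commands, is the bottleneck.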

Is it complex?

Docker uses a Dockerfile, which describes how to go from state A to state B and how the container works. See for yourself how to create a Jenkins image with it:

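```dockerfile
# Pinned to 12.04 so the base image matches the "precise" apt source below
FROM ubuntu:12.04

MAINTAINER Allan Espinosa "allan.espinosa@outlook.com"

# Enable the universe repository
RUN echo "deb http://archive.ubuntu.com/ubuntu precise universe" >> /etc/apt/sources.list

# Refresh the package index and install Java in the same layer,
# so a cached index is never reused with a newer install step
RUN apt-get update && apt-get install -q -y openjdk-7-jre-headless

# Download the Jenkins war file
ADD http://mirrors.jenkins-ci.org/war/latest/jenkins.war /root/jenkins.war

# Jenkins listens on port 8080
EXPOSE 8080

CMD ["java", "-jar", "/root/jenkins.war"]
```

Here it starts from a clean state: a fresh, untouched Ubuntu image already available on the public Docker registry. Then some commands are run to install Jenkins (installing its dependencies and fetching the war file).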

After that, it declares which port the service listens on and which command starts it.

All commands are just shell instructions that everyone knows; only a few keywords are needed to write a Dockerfile, and they are very simple. There is great, short documentation explaining all of them.

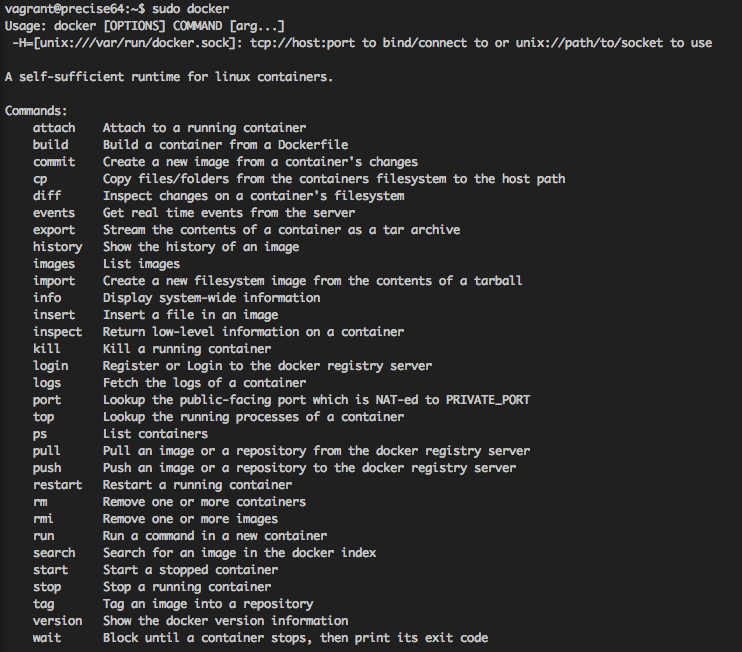

After that, the Docker command line can be used to build, commit, push and pull your image.

For a good overview of Docker, there is also a great interactive tutorial.

Docker is that simple. Once you have created your image, you can reuse it everywhere you need it: staging, production, etc.

But it’s OS-specific then?

Yes and no. A container has to be OS-specific, but as long as Docker runs on your OS, you don’t have to worry about that. Docker handles the communication between the host OS and the container; the container only has to declare how to expose a service.

A different OS can be used for each service: the database could run on CentOS and the backend on Debian, and Docker will handle this perfectly.

As they share the same kernel, the only requirement is that all your containers and your host run on a Linux kernel.

Can I orchestrate my containers with Docker?

Currently, you can’t. This is something dotCloud (the company behind Docker) wants to add in a future version.

Besides, I like Docker this way: its only role is to install an application and expose it as a service, and honestly that’s better. In my view, each feature in an application adds a layer of complexity: fewer features means better existing ones.

For orchestration, I recommend taking a look at the promising Flynn and Deis projects.

So we drop Chef, Puppet, … and rewrite all our servers with Docker?

Certainly not. Docker is young, and even the core contributors say it should not be used in production yet, even though some very large companies already use it for production and continuous integration, since it boots quickly into a fresh, prepared state. Besides, for very large and complex systems, Chef and Puppet are better than Docker for the moment: some tools in its ecosystem are still missing to make it even better.

But things are moving fast: keep it in mind, start playing with it and take a look. For newcomers to automation: skip Chef, Puppet, … and go directly to Docker; you will not waste your time.

Can I use both together?

If you start a new project with Docker, I would not recommend it: having multiple programs capable of doing the same things is overkill. At most, you can use Chef as an orchestrator for your servers, as Deis does.

But never use them inside your Dockerfile (don’t throw a chef-client or a puppet apply in it): you can never be sure it will produce the same commands and commits twice, and it can lead to unexpected results. The only reason to do so is if you have many Chef scripts and want a smooth transition when switching to Docker.
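A simplified model shows why this bites. Docker’s build cache reuses a `RUN` step when its parent layer and its command text are unchanged; it does not know what the command would fetch. In the Python sketch below (hypothetical names and data, not Docker’s real cache-key algorithm), a `RUN chef-client` step depends on cookbooks stored outside the Dockerfile: with a warm cache the stale result is reused, while a fresh build of the very same Dockerfile produces something different.

```python
import hashlib


def cache_key(parent_key, instruction):
    """Simplified model: a step is identified by its parent and its text only."""
    return hashlib.sha256(f"{parent_key}\n{instruction}".encode()).hexdigest()


def build(instructions, run, cache):
    """Replay instructions, reusing a cached result whenever the key matches.

    `run` stands in for executing one instruction; `cache` maps key -> result.
    """
    parent, results = "scratch", []
    for instruction in instructions:
        key = cache_key(parent, instruction)
        if key not in cache:
            cache[key] = run(instruction)
        results.append(cache[key])
        parent = key
    return results


# Hypothetical external state: the cookbook version on a Chef server.
chef_server = {"cookbook": "1.0"}


def run(instruction):
    if instruction == "RUN chef-client":
        return f"converged with cookbook {chef_server['cookbook']}"
    return f"ran {instruction}"


steps = ["FROM ubuntu", "RUN chef-client"]
cache = {}
first = build(steps, run, cache)
chef_server["cookbook"] = "2.0"    # cookbooks change outside the Dockerfile
cached = build(steps, run, cache)  # same text: the stale result is reused
fresh = build(steps, run, {})      # no cache: a different image
print(first[1], "|", cached[1], "|", fresh[1])
# converged with cookbook 1.0 | converged with cookbook 1.0 | converged with cookbook 2.0
```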

Some tips and final words

Docker is designed to be scalable, and it is very good at it, so an image should always be written with that in mind. If you need to run multiple services inside a single container, use supervisord: it is currently the common approach for this case.
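For reference, here is a small Python sketch that renders a supervisord configuration for several services in one container, using the standard `configparser` module. It assumes supervisord is installed in the image and started as the container’s main command; `nodaemon=true` keeps supervisord in the foreground so the container does not exit. The program names and commands are only examples.

```python
import configparser
import io


def supervisord_conf(programs):
    """Render a supervisord.conf that runs every program in `programs`.

    `programs` maps a program name to the command that starts it in the
    foreground (supervisord expects its children not to daemonize).
    """
    conf = configparser.ConfigParser(interpolation=None)
    conf["supervisord"] = {"nodaemon": "true"}  # stay in the foreground
    for name, command in programs.items():
        conf[f"program:{name}"] = {"command": command, "autorestart": "true"}
    out = io.StringIO()
    conf.write(out)
    return out.getvalue()


# Example services; both commands run in the foreground.
conf_text = supervisord_conf({
    "sshd": "/usr/sbin/sshd -D",
    "apache2": "/usr/sbin/apache2ctl -D FOREGROUND",
})
print(conf_text)
```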

If you have never tried Docker and have a few minutes to spare, go for it, seriously!
