Scaling the Symfony Demo app to the extreme with Varnish

This post is the transcript of the talk “Feedback on our use of Varnish” given at SymfonyWorld 2021 Summer Edition.

We are going to talk about scalability: keeping a website fast when traffic increases a lot, without compromising on functionality.

The Symfony demo app is a basic web application showing a lot of Symfony features, which makes it a good candidate for this presentation. We are going to scale it from a few users to tens of thousands of concurrent users.

I will explain problems we faced, solutions taken and how we ended up with our current architecture with performance in mind. I will show how Varnish is our central piece for performance.

There are thousands of other solutions, from static websites to big CDNs. But I want to keep control of everything and code like we do every day.

Disclaimer: we will not talk about DNS, SSL resolution, apache/nginx or SQL optimization. This talk is not a deep dive but more a step by step of different architectures, for inspiration.

How-to

We followed the best practices given by Symfony. We have opcache, preload, and warmed up cache in the production environment.

Monitoring your infrastructure is essential. You need to know what happens. If you have to guess, I can assure you that you will be surprised more than you know. I stopped counting the times where the performance blocker was a simple mistake that caused big issues and nobody expected it.

We have tools like Blackfire or xdebug for profiling the code. For the infrastructure, it’s more complicated because of the number of components. It can be expensive, but a slow or crashed website will cost you more in the long run. You can crash because of the bandwidth to Redis, because of too many open connections to a service, etc.

I use New Relic a lot, Datadog and now Blackfire monitor.

Monitoring means:

- aggregate all your logs;

- get traces;

- add business metrics (every response is a 200 but there were no sales in the last few hours?);

- load benchmark.

For the sake of this talk, we will mainly focus on the number of requests per second we can handle, at which response time and at what cost. What slows you down?

01. Typical Web architecture

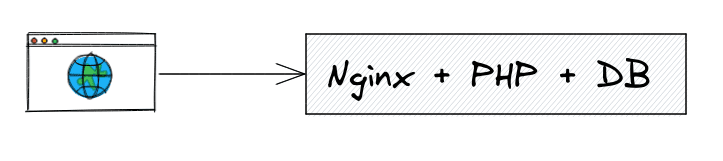

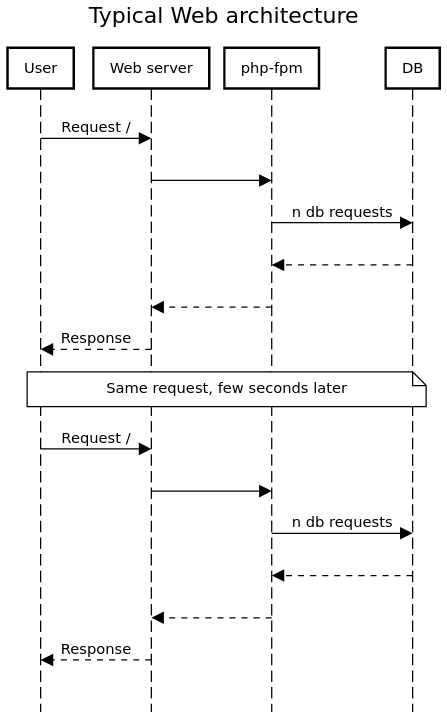

Let’s begin with the typical Web architecture, a Web server, nginx here (but the illustration would look the same with apache), php-fpm and a database. Everything is on a shared server.

The controller of the demo app endpoint handles pagination, filters per tag, calls the database and does templating via Twig.

```php
public function index(Request $request, int $page, string $_format, PostRepository $posts, TagRepository $tags): Response
{
    $tag = null;
    if ($request->query->has('tag')) {
        $tag = $tags->findOneBy(['name' => $request->query->get('tag')]);
    }
    $latestPosts = $posts->findLatest($page, $tag);

    // Every template name also has two extensions that specify the format and
    // engine for that template.
    // See https://symfony.com/doc/current/templates.html#template-naming
    return $this->render('blog/index.'.$_format.'.twig', [
        'paginator' => $latestPosts,
        'tagName' => $tag ? $tag->getName() : null,
    ]);
}
```

We do not cache data or the response. Requesting the same page again will trigger the same computation. From user to PHP to database, and back, each time.

Performance degrades with traffic, there is no scalability.

We could scale vertically by using a bigger and more expensive server, but there is a limit to that and it’s a single point of failure.

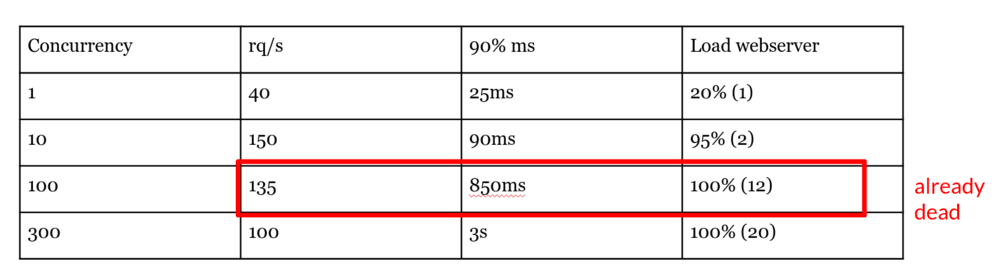

02. Typical Web architecture – high traffic

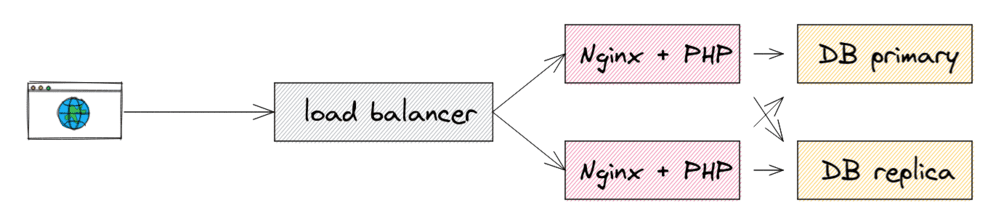

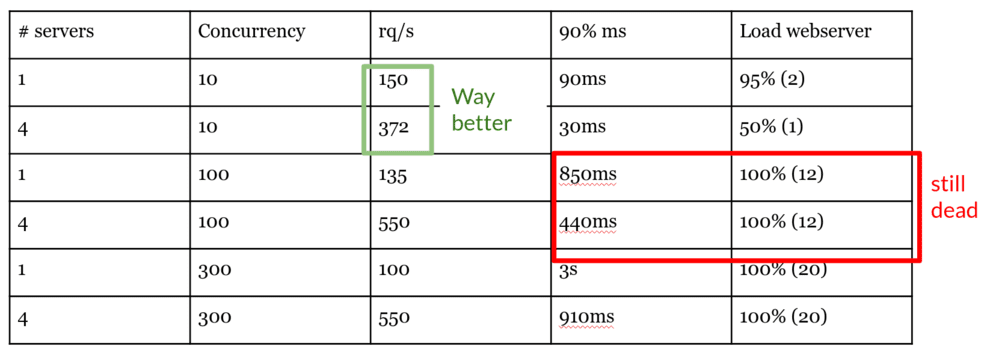

We can scale horizontally by splitting services and by adding more Web servers and more database replicas.

You want at least two of everything. You don’t want a single point of failure, you want redundancy.

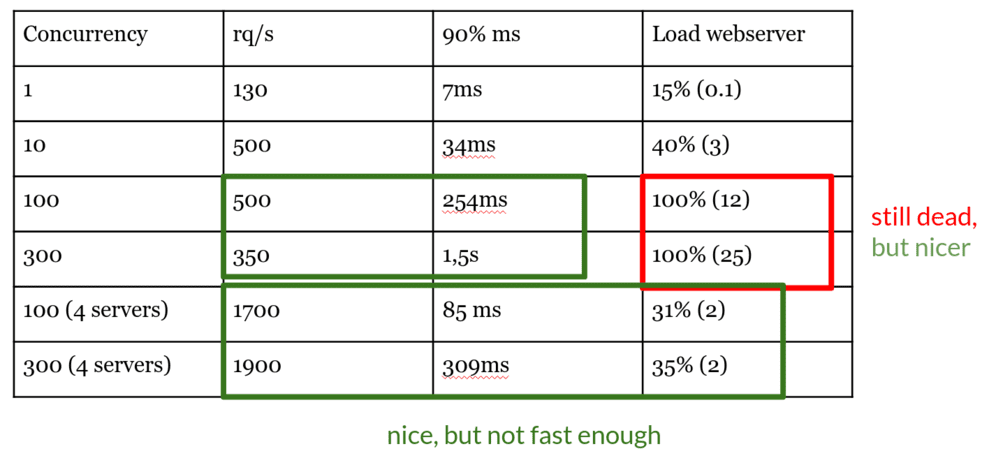

We see that performance and scalability improve as we throw more servers at it. Here I forgot to mention that the database server is a monster that costs a lot. A cheap database server would have been overloaded with our 4 web servers.

It’s still not fast enough to compute something that has already been computed and it’s a waste of resources. This waste is also bad for the environment.

03. Typical web architecture with app cache

So we want to avoid running expensive code on each request. We can use an app cache with a backend like Redis or Memcached. It allows us to compute less and reduces pressure on the database.

Let’s see how we can do it and show you that you need to be careful because handling cache can be tricky.

public function index(Request $request, int $page, string $_format, PostRepository $posts, TagRepository $tags): Response

{

$tag = null;

if ($request->query->has('tag')) {

$tag = $tags->findOneBy(['name' => $request->query->get('tag')]);

}

$latestPosts = $posts->findLatest($page, $tag);

// Every template name also has two extensions that specify the format and

// engine for that template.

// See https://symfony.com/doc/current/templates.html#template-naming

return $this->render('blog/index.'.$_format.'.twig', [

'paginator' => $latestPosts,

'tagName' => $tag ? $tag->getName() : null,

]);

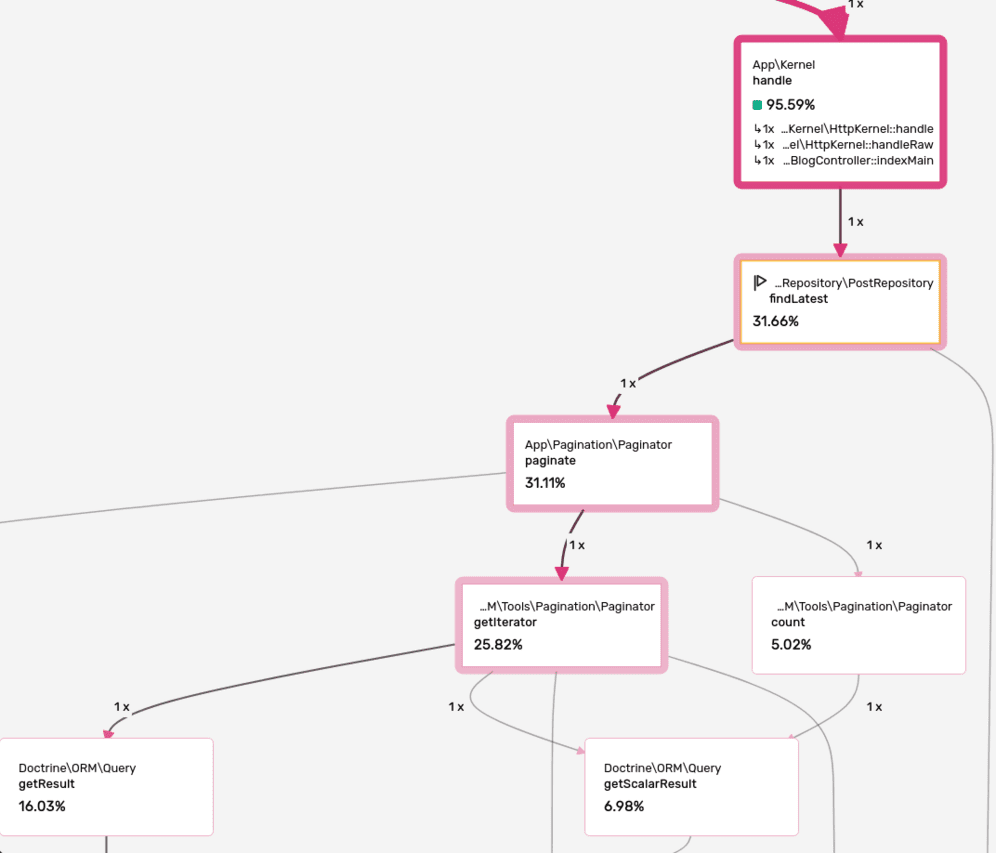

}Remember the previous code? Let’s use my good friend Blackfire to analyses that.

The findLatest() method represents 32% of the total time and 100% of the requests to the database. Let’s cache that by using the Symfony cache PSR-6 implementation with a Redis backend.

framework:

cache:

# Put the unique name of your app here: the prefix seed

# is used to compute stable namespaces for cache keys.

#prefix_seed: your_vendor_name/app_name

# Redis

app: cache.adapter.redis

default_redis_provider: '%env(REDIS_CACHE_DSN)%'public function index(Request $request, int $page, string $_format, PostRepository $posts, TagRepository $tags, AdapterInterface $cacheApp): Response

{

$tag = null;

if ($request->query->has('tag')) {

$tag = $tags->findOneBy(['name' => $request->query->get('tag')]);

}

$cacheKey = 'posts';

$cacheItem = $cacheApp->getItem($cacheKey);

if ($cacheItem->isHit()) {

$latestPosts = $cacheItem->get();

} else {

$latestPosts = $posts->findLatest($page, $tag);

$cacheItem->set($latestPosts);

$cacheItem->expiresAfter(20);

$cacheApp->save($cacheItem);

}

return $this->render('blog/index.'.$_format.'.twig', [

'paginator' => $latestPosts,

'tagName' => $tag ? $tag->getName() : null,

]);

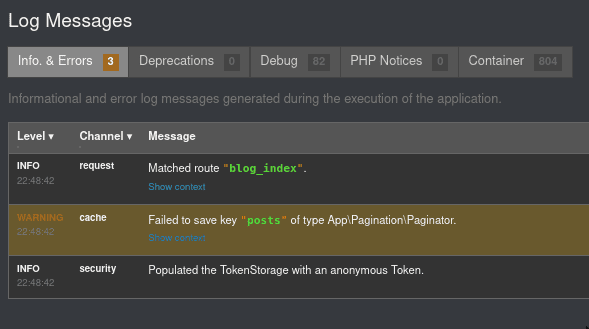

}But we see no improvement whatsoever, this is strange. Let’s look at the logs.

Okayyy, the cache is never saved, so of course there is no improvement. The paginator is an iterator with a closure. It’s not serializable and so not cacheable. I can say that I’m not that happy that this failure is silent.

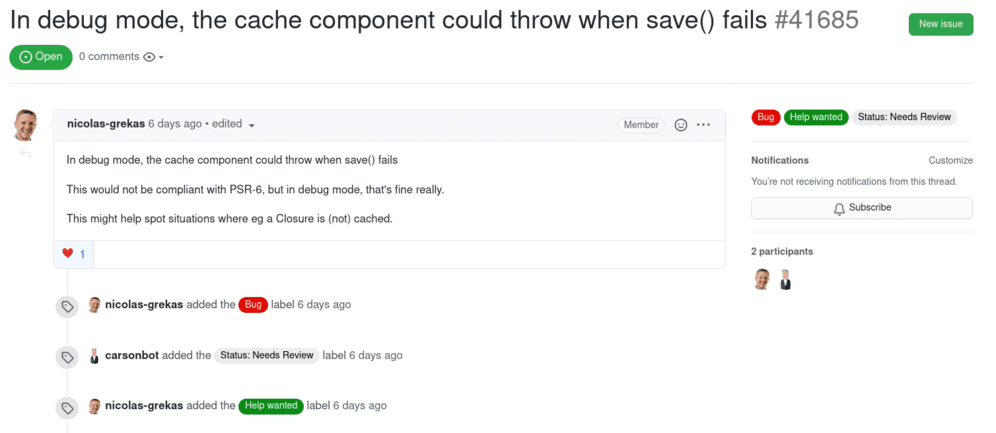

… after reviewing this talk, Nicolas Grekas opened a ticket so that this case throws an exception instead of failing silently.

findLatest() is not a good candidate for caching after all.
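The failure can be reproduced without Symfony. The sketch below is a minimal illustration in plain PHP (8.0+), not the demo app's code: `LazyPaginator` and `isSerializable()` are hypothetical names standing in for a paginator that carries a closure. PHP refuses to serialize closures, so an object holding one cannot be written to a Redis-backed cache, while already materialized data can.

```php
<?php
declare(strict_types=1);

// Hypothetical stand-in for a lazy paginator: it keeps a closure that
// produces the results on demand.
final class LazyPaginator
{
    public function __construct(private \Closure $loader)
    {
    }

    /** @return list<string> */
    public function results(): array
    {
        return ($this->loader)();
    }
}

// Returns true when PHP is able to serialize the given value.
function isSerializable(mixed $value): bool
{
    try {
        serialize($value);

        return true;
    } catch (\Throwable) {
        // serialize() throws as soon as it meets a Closure.
        return false;
    }
}

$paginator = new LazyPaginator(static fn (): array => ['post 1', 'post 2']);

var_dump(isSerializable($paginator));            // the object holds a closure
var_dump(isSerializable($paginator->results())); // a plain array of strings
```

The first check reports `false` and the second `true`: only something already materialized, such as the rendered response, is a workable cache value here.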

Let’s cache the response object itself.

```php
public function index(Request $request, int $page, string $_format, PostRepository $posts, TagRepository $tags, AdapterInterface $cacheApp): Response
{
    $tag = null;
    if ($request->query->has('tag')) {
        $tag = $tags->findOneBy(['name' => $request->query->get('tag')]);
    }

    $cacheKey = 'posts';
    $cacheItem = $cacheApp->getItem($cacheKey);
    if ($cacheItem->isHit()) {
        $response = $cacheItem->get();
    } else {
        $latestPosts = $posts->findLatest($page, $tag);
        $response = $this->render('blog/index.'.$_format.'.twig', [
            'paginator' => $latestPosts,
            'tagName' => $tag ? $tag->getName() : null,
        ]);
        $cacheItem->set($response);
        $cacheItem->expiresAfter(20);
        $cacheApp->save($cacheItem);
    }

    return $response;
}
```

It works! But do you see the new issue?

… same cache whether we have a tag or not. So we need to use the tag in the cache key.
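One way to build such a key, as a sketch: `postsCacheKey()` is a hypothetical helper, not part of the demo app. It folds the format, the page number and the tag into the key, and hashes the tag name because PSR-6 reserves the characters `{}()/\@:` in cache keys.

```php
<?php
declare(strict_types=1);

/**
 * Builds a cache key that differs for every combination of format, page
 * and tag, so that two different listings never share a cache entry.
 */
function postsCacheKey(int $page, ?string $tagName, string $format = 'html'): string
{
    // The tag name is free-form text: hashing it keeps the key within the
    // characters that every PSR-6 implementation accepts.
    $tagPart = null === $tagName ? 'all' : md5($tagName);

    return sprintf('posts.%s.page_%d.tag_%s', $format, $page, $tagPart);
}

echo postsCacheKey(1, null), "\n";
echo postsCacheKey(1, 'symfony'), "\n";
```

In the controller, this key would replace the hard-coded `'posts'` string, with `$tag ? $tag->getName() : null` as the tag argument.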

PHP returns faster since there is less to compute. It can handle more traffic, but it’s still not fast enough when we have serious concurrency.

What happens when a post is added or deleted? The cache is still there and your action will not be visible. We need to handle the lifetime of this cache. And you know the famous quote from Phil Karlton, “There are only two hard things in Computer Science: cache invalidation and naming things.”

Symfony is here and gives us all the tooling to handle that cache lifetime, from setting time to live, to cache invalidation.

Here we force the cache purge on delete, it is synchronous and the next user will compute the cache:

```php
public function delete(Request $request, Post $post, AdapterInterface $cacheApp): Response
{
    // …
    $this->clearCache($cacheApp);
    $this->addFlash('success', 'post.deleted_successfully');

    return $this->redirectToRoute('admin_post_index');
}

protected function clearCache(AdapterInterface $cacheApp): void
{
    $cacheApp->clear();
}
```

Here, we set the cache lifetime to 20 seconds. Such a short lifetime copes well with high concurrency, but if you have little traffic, almost every user will get a cache miss.

public function index(Request $request, int $page, string $_format, PostRepository $posts, TagRepository $tags, AdapterInterface $cacheApp): Response

{

…

$cacheItem->set($response);

$cacheItem->expiresAfter(20);

$cacheApp->save($cacheItem);

…

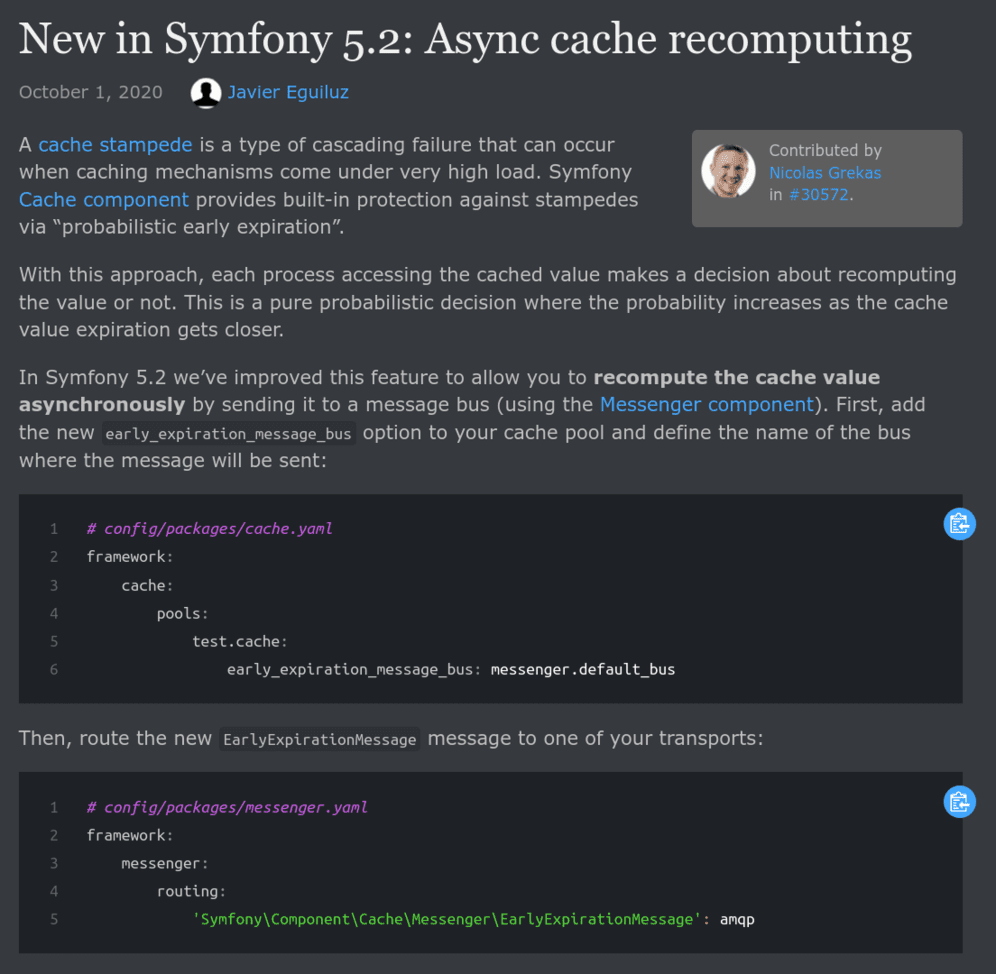

}You may have heard of the new feature to use messenger to recompute cached data before being cleared (demo).

I will let you investigate as I use something else.

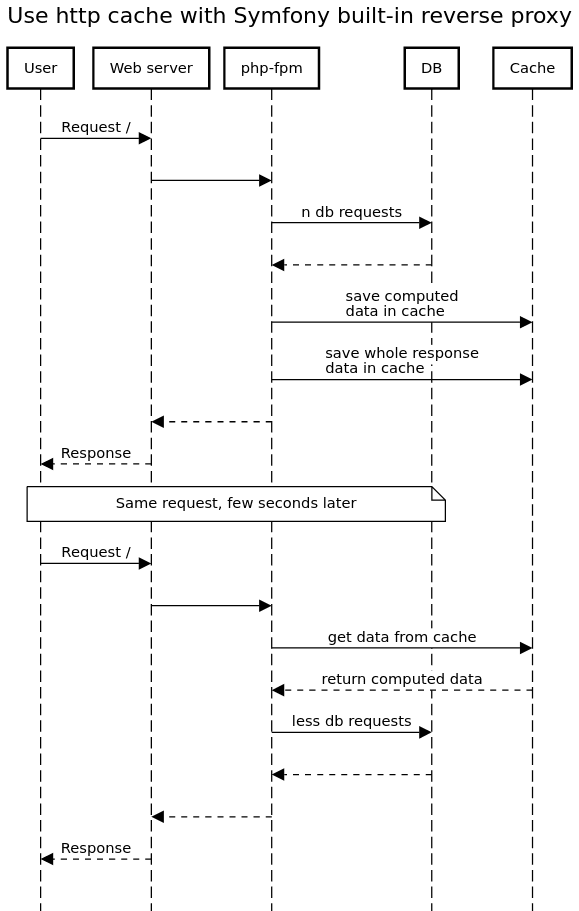

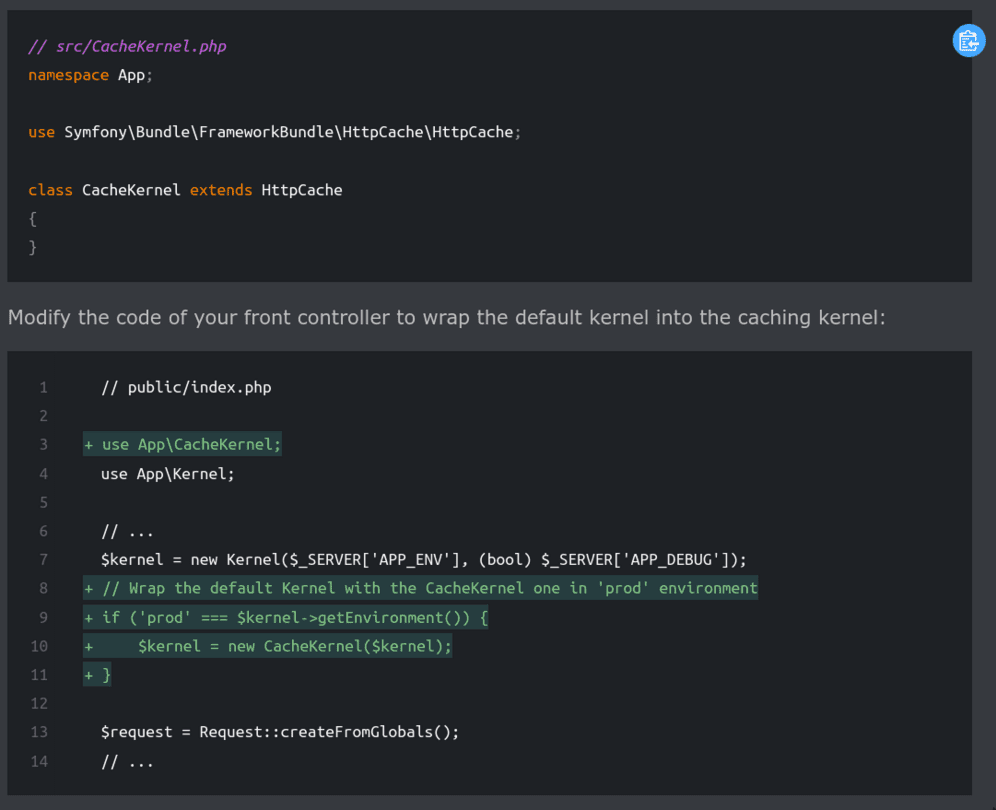

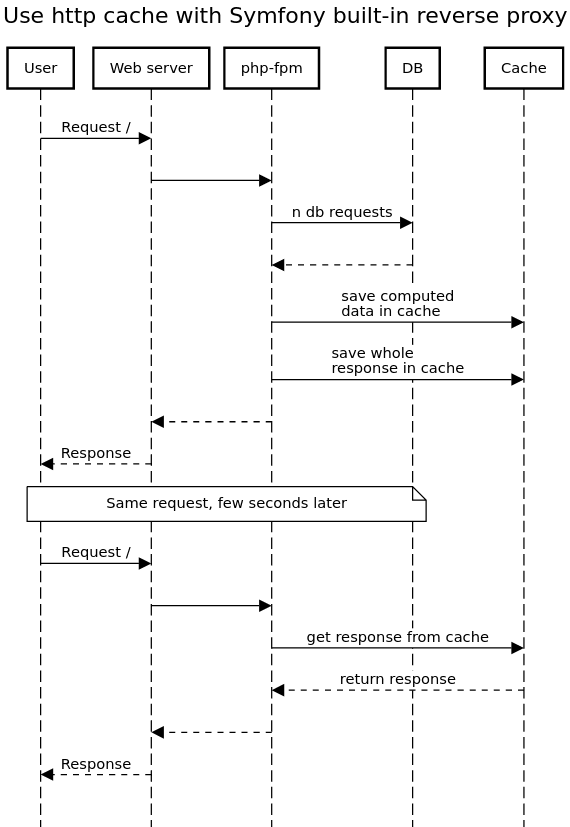

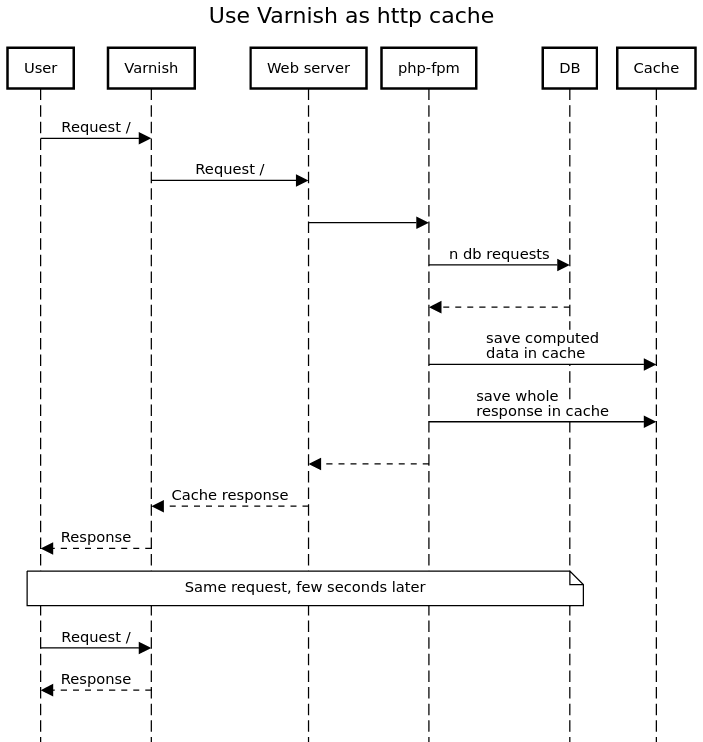

04. Use HTTP cache with Symfony built-in reverse proxy

Instead of storing the response in Redis, we can use a proxy that is before the actual application so Symfony does not even need to start up on a cache hit.

Symfony gives us an included reverse proxy which allows faster response because the bootstrap is many times lighter than that of the regular kernel. And it handles the difference between having a tag parameter or not by itself.

To be able to use HTTP cache we need to amend the response with new cache headers. The response is now public and cacheable for one hour.

```php
public function index(Request $request, int $page, string $_format, PostRepository $posts, TagRepository $tags, AdapterInterface $cacheApp): Response
{
    $response ='…';
    $response->setPublic();
    $response->setSharedMaxAge(3600);
    $response->headers->set(AbstractSessionListener::NO_AUTO_CACHE_CONTROL_HEADER, 'true');

    return $response;
}
```

Then modify the code of your front controller to wrap the default kernel in the caching kernel.

I showed you the risks of caching. Having two layers of cache is more than twice the risk. Invalidation should happen in cascade with data first because otherwise we http-cache old data and it can become a nightmare to handle. So we remove the Redis cache in favor of this one.

We begin to have very very nice performance, but we still end up with a saturated server.

A very very nice feature allowed by HTTP cache is Edge Side Includes, or ESI, which lets you set a separate cache lifetime for different fragments of the page. Here the sidebar is cached for longer than the page itself and, more importantly, is shared across ALL pages.

A blog post can be cached for days while its comments section is cleared whenever an event happens. You avoid recomputing the whole page at each event. ESI with the Symfony reverse proxy happens in the same FPM process.

There is still the same issue with cache invalidation, but I will explain that later.

It’s fast but we still share the fpm process with the one that computes the response. We can do better.

05. Use HTTP cache with a dedicated reverse proxy: Varnish

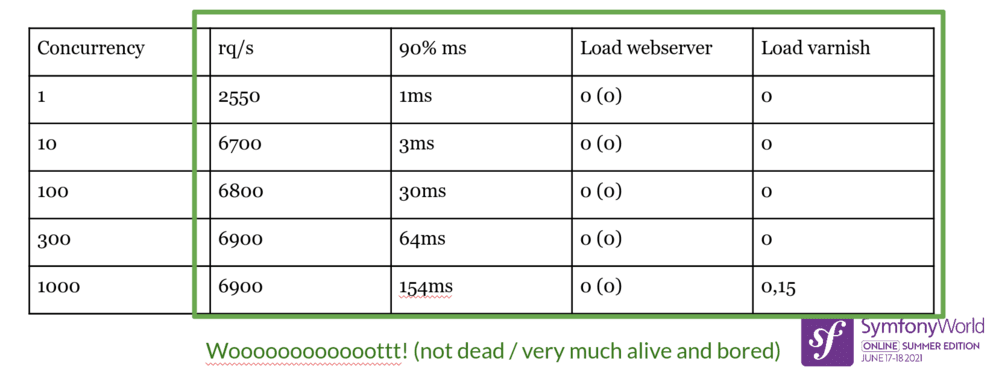

Varnish does the same thing as the built-in reverse proxy, but with better support for the HTTP protocol, faster and with next to 0 CPU usage. A typical cached response is returned in less than 1ms at almost 0 cost.

Varnish also tries to avoid sending the same request to the backend several times concurrently.

With a true reverse proxy, the numbers are something else. We got stellar response times and astonishing concurrency for a practically nonexistent load. We can clearly say that the servers are sleeping.

Varnish is VERY hard to benchmark, it just doesn’t care.

If you haven’t used something like Varnish or squid before you should begin to understand what kind of power it gives us. To use it, we haven’t added anything besides installing it and setting the correct cache HTTP headers in the response.

But with great power comes great responsibility. Varnish is as tricky as it is powerful. Over the years of using it, even if we tend to use almost the same configuration, we’ve learned a couple of things the hard way. I wanted to share some of those lessons with you today.

What should you know?

- Varnish keeps everything in memory, which explains the speed;

- It is programmable with a language called VCL. It is kind of NOT nice: it’s very limited and has a lot of quirks;

- Varnish’s grace mode is a joy in itself: it provides the same thing as the Messenger call that recomputes the cache before it expires, but transparently, with nothing to do;

- Varnish is able to hot reload, so you can change its configuration without losing cached data (although sometimes losing it is unavoidable, for example if you change the hash);

- ESI with Varnish triggers a whole separate request to the web server and php-fpm, so you do not want too many ESI fragments per page.

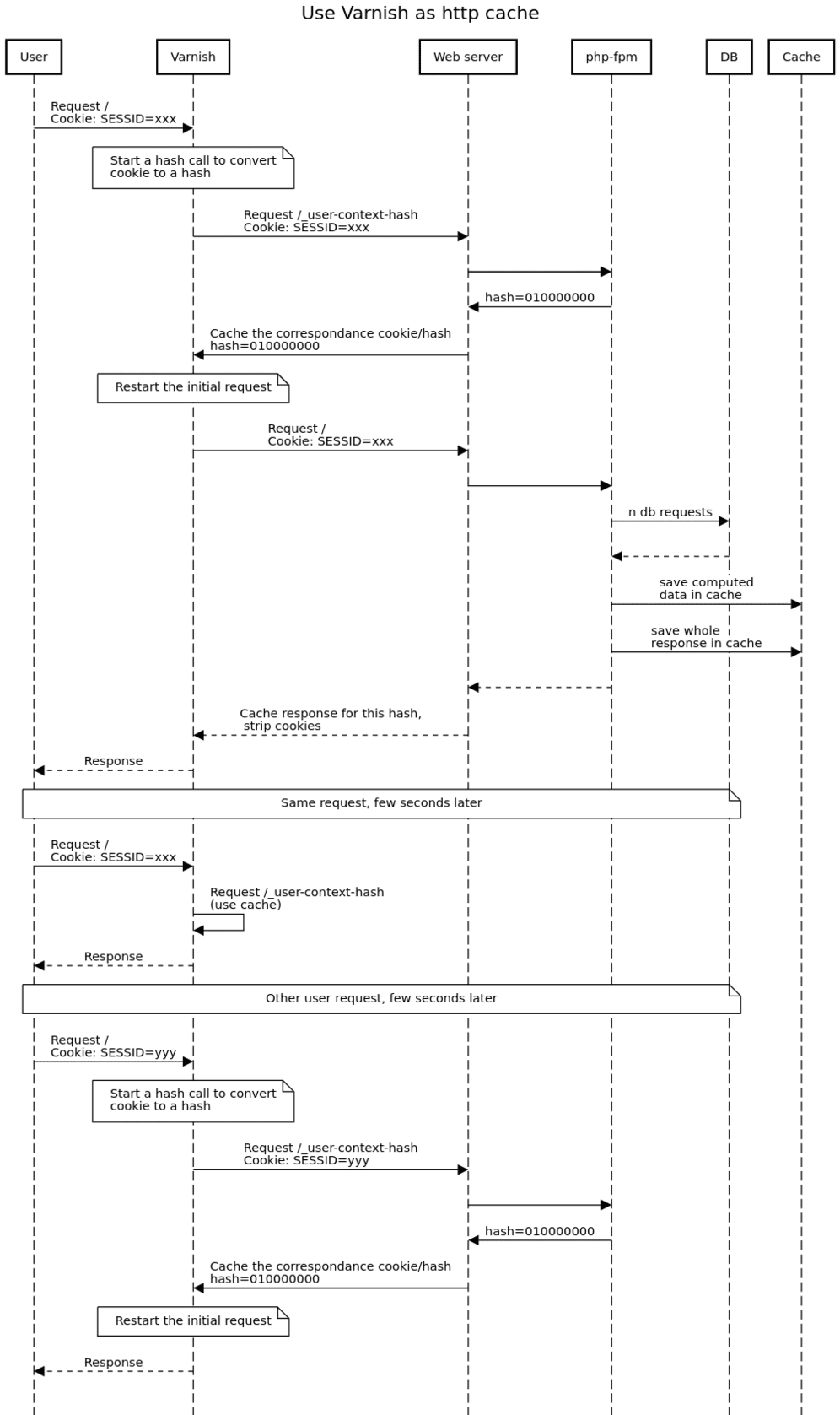

06. HTTP cache with authenticated traffic

By default, cookies or authorization header will prevent reverse proxies from caching at all. This is a security feature to prevent serving private content to someone else.

```vcl
sub vcl_recv {
    if (req.http.Authorization) {
        # Not cacheable by default.
        return (pass);
    }
    if (req.http.cookie) {
        # Not cacheable by default.
        return (pass);
    }
    // …
}
```

From the moment you start a session, you never get cached responses. That becomes an issue as soon as users begin to interact with your project, because from then on nothing they request is served from cache.

It also means no form with CSRF token. That can be an issue.

That’s the reason why for that case we tend to prefer app cache over HTTP cache.

But of course it’s a behavior we can change and with some tweaks, we can have the performance of Varnish with authenticated users, as long as the content is free to share.

First, clean all cookies we do not want.

```vcl
sub vcl_recv {
    // Remove all cookies except the session ID.
    if (req.http.Cookie) {
        set req.http.Cookie = ";" + req.http.Cookie;
        set req.http.Cookie = regsuball(req.http.Cookie, "; +", ";");
        set req.http.Cookie = regsuball(req.http.Cookie, ";(PHPSESSID)=", "; \1=");
        set req.http.Cookie = regsuball(req.http.Cookie, ";[^ ][^;]*", "");
        set req.http.Cookie = regsuball(req.http.Cookie, "^[; ]+|[; ]+$", "");

        if (req.http.Cookie == "") {
            // If there are no more cookies, remove the header to get page cached.
            unset req.http.Cookie;
        }
    }
}
```

Yes, VCL is not nice.

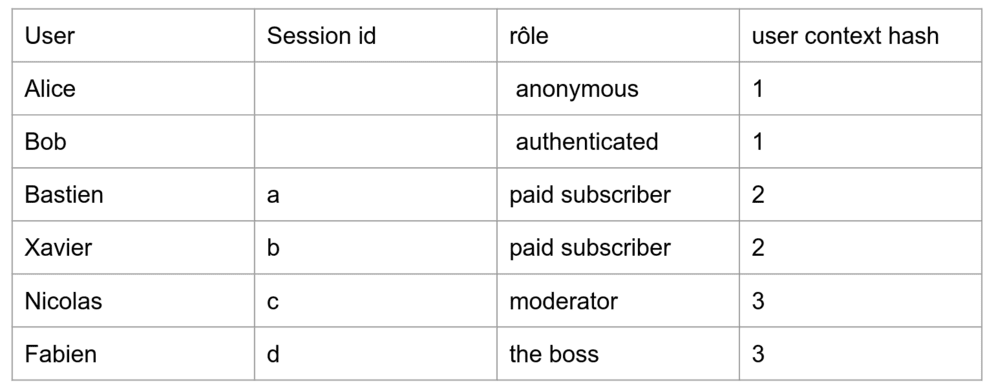

Then, we can use something called “user context hash” that assigns you a “context hash” that corresponds to the cache key of the HTTP cache. For example, anonymous and authenticated “not premium” users share the same cache, paid subscribers another one and moderators another one. You build only 3 versions of the same page, no matter the number of users in each group. It works very well for a news or an e-commerce website for example.
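The grouping idea can be sketched in a few lines of plain PHP. This is an illustration only, not how FOSHttpCacheBundle computes its hash: `contextGroup()`, `userContextHash()` and the role names `ROLE_MODERATOR` and `ROLE_SUBSCRIBER` are assumptions made for the example. The point it shows is that the hash depends on the group, never on the individual user.

```php
<?php
declare(strict_types=1);

/**
 * Maps a user's roles to one of three cache groups.
 * ROLE_MODERATOR and ROLE_SUBSCRIBER are hypothetical role names.
 */
function contextGroup(array $roles): string
{
    if (in_array('ROLE_MODERATOR', $roles, true)) {
        return 'moderator';
    }
    if (in_array('ROLE_SUBSCRIBER', $roles, true)) {
        return 'subscriber';
    }

    // Anonymous visitors and non-premium accounts share the same pages.
    return 'default';
}

/** The hash only depends on the group, never on the individual user. */
function userContextHash(array $roles): string
{
    return hash('sha256', contextGroup($roles));
}

$users = [
    'anonymous' => [],
    'alice' => ['ROLE_USER'],
    'bob' => ['ROLE_USER', 'ROLE_SUBSCRIBER'],
    'carol' => ['ROLE_USER', 'ROLE_SUBSCRIBER'],
    'dave' => ['ROLE_USER', 'ROLE_MODERATOR'],
];

$hashes = array_unique(array_map('userContextHash', $users));
printf("%d users, %d cached variants\n", count($users), count($hashes));
```

With these five sample users the script reports three cached variants, and that number stays at three however many users are added to each group.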

You can also use ESI for user-related content inside a page cached per user context. We could have used an Ajax call cached per user, but we put a JSON array directly in the cached ESI.

To install it we need a few things:

1/ paste some code in the VCL config, restart it

2/ add FOSHttpCacheBundle, with composer of course:

```bash
composer require friendsofsymfony/http-cache-bundle guzzlehttp/psr7 php-http/guzzle6-adapter
```

3/ enable the “user context hash” feature

```yaml
fos_http_cache:
    # ref https://foshttpcachebundle.readthedocs.io/en/latest/reference/configuration/tags.html
    tags:
        enabled: true
        response_header: 'Cache-Tags'

    # ref https://foshttpcachebundle.readthedocs.io/en/latest/reference/configuration/user-context.html
    user_context:
        enabled: true
        role_provider: true
        user_hash_header: 'X-User-Context-Hash'
        hash_cache_ttl: 1800
        match:
            method: 'GET'
            accept: 'application/vnd.fos.user-context-hash'
```

To conclude on user context hash:

- user context hash is for content groupable by something like role (think paywall);

- user context hash changes everything for an authenticated website. I’ve seen so many websites with no HTTP cache because of cookies and sessions;

- the user context hash should be very very fast and cached.

07. Varnish: invalidate

We already talked about cache invalidation, as one of the two hardest things in computer science. But of course, Symfony as a framework is here to help us.

Performance: sometimes you prefer to respond fast, even with a possibly outdated version of the page.

Accuracy: sometimes you prefer that the user gets a fresh page, even if they have to wait.

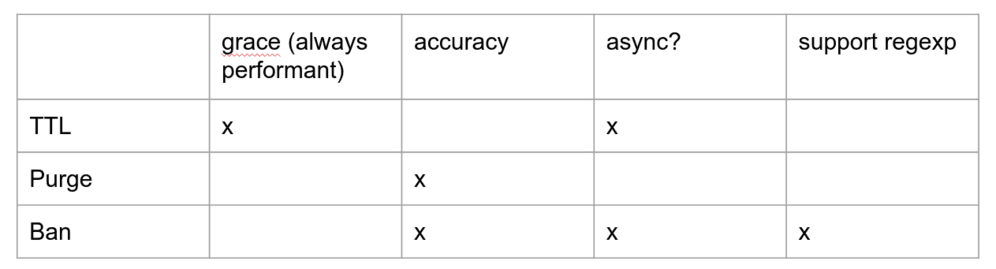

We have a lot of mechanisms at our disposal.

With a TTL we get grace mode: the first user to request an expired page gets the outdated page instantly while Varnish makes an asynchronous request to the backend to replace the cached page. Even a small TTL is better than no cache.

Purge removes the affected URLs from the cache synchronously.

Ban is asynchronous: it adds the ban to a list. Every cached object has a pointer to the last element of the ban list it has been checked against. When fetching from the cache, Varnish compares the cached data to all the newer bans. There is no grace mode: the next user request will hit the backend, something we want to avoid if possible. Bans support regular expressions. A ban lurker cleans cold content asynchronously when Varnish is idle.
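The TTL and grace behaviour described above can be modelled as a small decision function. This is a simplified sketch, not Varnish's implementation: `cacheDecision()` is a hypothetical name, and the 60 second TTL and 300 second grace period are arbitrary values chosen for the example.

```php
<?php
declare(strict_types=1);

/**
 * Decides how a cache with a TTL and a grace period answers a request for
 * an object of the given age (all values in seconds).
 *
 * - 'hit':   the object is fresh and is served as is;
 * - 'stale': the object has expired but is still within grace, so it is
 *            served immediately while a refresh happens in the background;
 * - 'miss':  the object is too old, the request waits for the backend.
 */
function cacheDecision(int $age, int $ttl, int $grace): string
{
    if ($age < $ttl) {
        return 'hit';
    }
    if ($age < $ttl + $grace) {
        return 'stale';
    }

    return 'miss';
}

// Assumed settings for the example: 60s TTL, 300s grace.
foreach ([10, 90, 400] as $age) {
    printf("age %3ds: %s\n", $age, cacheDecision($age, 60, 300));
}
```

A purged or banned object falls straight into the `miss` case on the next request, which is why the text prefers expiry with grace whenever a slightly outdated page is acceptable.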

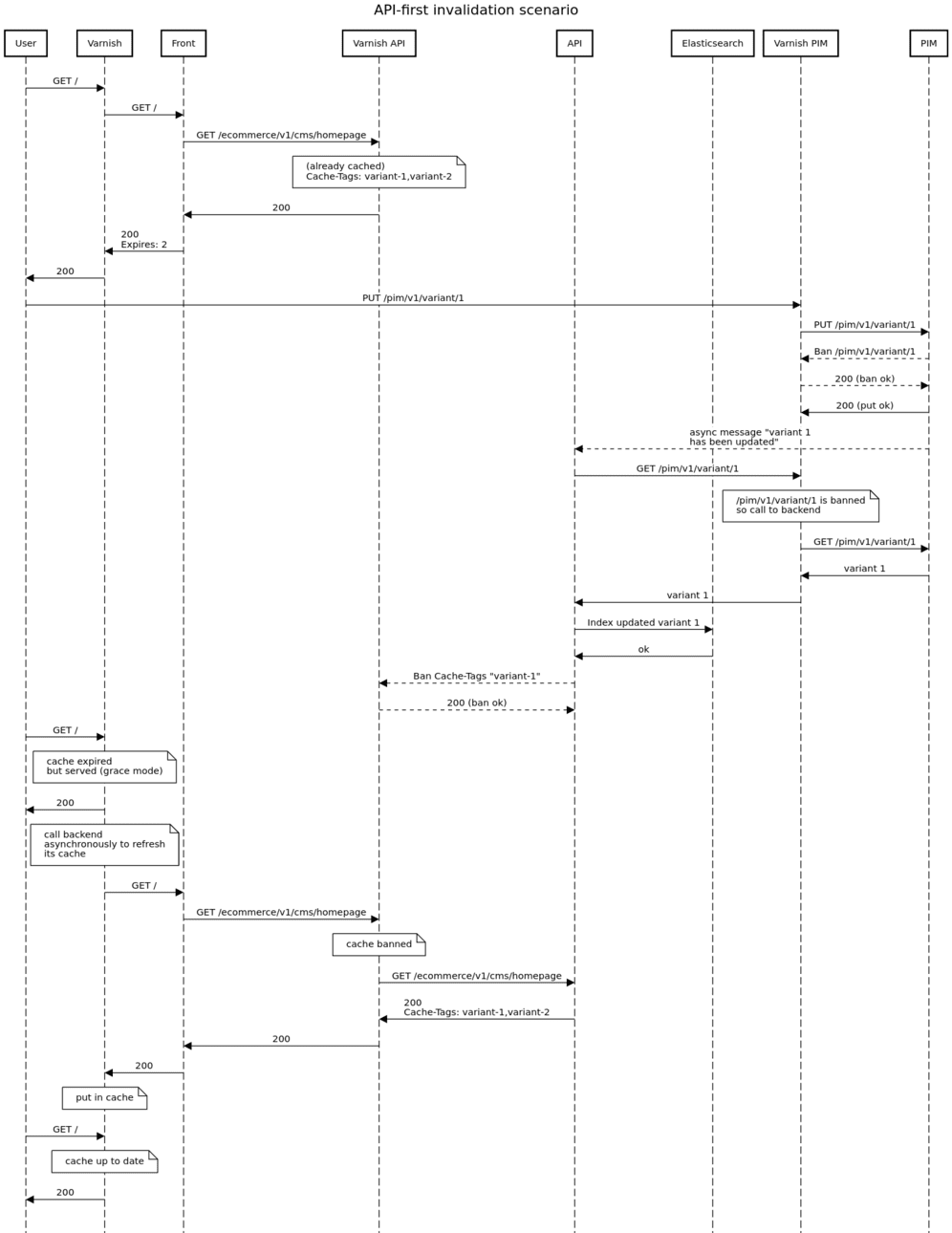

08. Complicate everything: API first

Imagine our blog has grown vastly and now has a very popular shop. It’s developed API first, with all product info in a PIM.

The homepage is already computed by the API and cached in the API Varnish. The front uses that cached data and renders it in HTML with Twig. The end result is cached in the front Varnish.

What happens when you update data in the PIM?

It invalidates the PIM Varnish for this variant, then the PIM calls the API via a message queue to tell it that variant 1 has been updated.

The API asks the PIM for the fresh data and processes it. It also invalidates all the API cached responses that included variant 1, thanks to the cache tags.

Then the user asks again for the homepage. Despite the page being out of date, Varnish instantly returns the old version, asks for the new one asynchronously and caches it.

The data is cold in the API Varnish, so the API is called to compute the homepage. It returns it and the API Varnish caches it.

Then the user asks again for the homepage and gets the new one.

No user gets to wait and the response is now always fast.

09. Varnish multi zone

We have Varnish servers in Europe, the USA and China. We do not use a CDN for that because we have a lot of logic handled by Varnish, which is not feasible with the configuration available on a CDN.

We do not call Varnish directly to invalidate: we developed a lambda to handle these invalidations on decoupled Varnish servers.

More caveats while scaling

I made this talk today because I had issues with the scaling of this infrastructure and I wanted to share some with you, because battle stories have always been my favorites.

Everything begins with monitoring.

Consistent query string keys order

By analysing the access logs, I discovered that some API URLs were called six times where I expected two (one for each Varnish server).

We have three API clients:

- Android: encoding 1, alphabetical order;

- iOS: encoding 2, alphabetical order;

- Web: encoding 1, random order.

It means that for each URL, we compute everything three times. We control all the clients, so we fixed it there, but we could have used a Varnish module made by The New York Times.
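What "fixing it in the clients" amounts to can be sketched as a normalization step: sort the query keys and re-encode the values in one consistent way, so that equivalent requests produce the same URL and therefore the same cache entry. `canonicalUrl()` is a hypothetical helper written for this example, and the choice of RFC 3986 encoding is an assumption.

```php
<?php
declare(strict_types=1);

/**
 * Returns the path and query string of a URL with the query keys sorted
 * and the values re-encoded consistently (RFC 3986).
 *
 * Caveat: parse_str() rewrites dots and spaces in key names to
 * underscores, so this sketch only suits simple parameter names.
 */
function canonicalUrl(string $url): string
{
    $parts = parse_url($url);
    if (false === $parts) {
        return $url; // Malformed URL: leave it untouched.
    }

    $path = $parts['path'] ?? '/';
    if (!isset($parts['query']) || '' === $parts['query']) {
        return $path;
    }

    parse_str($parts['query'], $params);
    ksort($params, SORT_STRING);

    return $path.'?'.http_build_query($params, '', '&', PHP_QUERY_RFC3986);
}

// Same request, different key order and different encoding of the space.
echo canonicalUrl('/api/products?b=2&a=hello+world'), "\n";
echo canonicalUrl('/api/products?a=hello%20world&b=2'), "\n";
```

Both calls print `/api/products?a=hello%20world&b=2`, so the three clients would share a single cached response per URL.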

Crash Redis

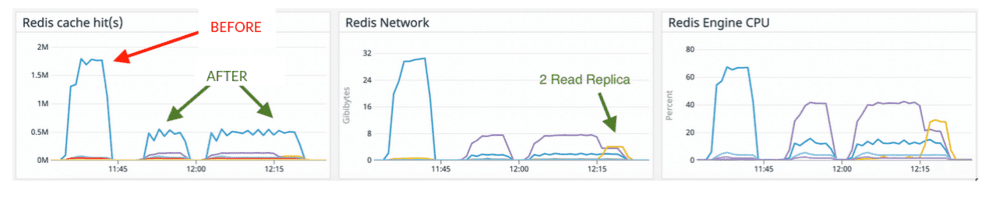

We have the extreme honor to have crashed an enormous Redis cache.

We had a key used for storing a computed array. That array was in append mode only, no replacement. The key increased in size over and over, resulting in exceeding Redis’s bandwidth and 100% CPU usage.

Site crashed. Because we cached. Badly.

Monitor your bandwidth and Redis engine CPU.

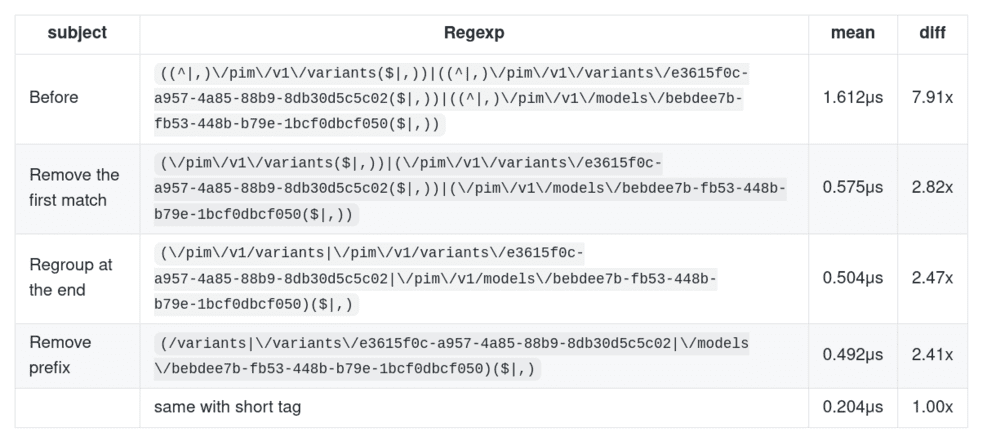

Ban tags with regex

On a Varnish dashboard we correlated the number of bans with a heavy load on Varnish. It seems normal, we ask Varnish to do something, it costs CPU.

But one day, I played with the regexp and discovered that we can do 10 times better.

As both PHP and Varnish use PCRE as their regexp engine, I used lyrixx/php-bench to test the cost of different scenarios.

Now Varnish is always sleeping, even with a lot of bans.

I used this knowledge to improve the tag handling in API Platform and FOSHttpCache.
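A micro-benchmark of this kind can be sketched in plain PHP. The talk does not say which patterns were compared, so the two below are assumptions chosen only to show the method: an unanchored tag name, and a pattern anchored on the commas of a comma-separated tag header. `banPattern()` and `timePattern()` are hypothetical helpers, and timings measured in PHP only hint at what Varnish would do.

```php
<?php
declare(strict_types=1);

/** Builds a pattern matching any of the tags as a whole item of a comma-separated list. */
function banPattern(array $tags): string
{
    $quoted = array_map(static fn (string $tag): string => preg_quote($tag, '/'), $tags);

    return '(^|,)('.implode('|', $quoted).')(,|$)';
}

/** Returns the time in milliseconds spent matching $pattern $iterations times. */
function timePattern(string $pattern, string $subject, int $iterations = 100_000): float
{
    $start = hrtime(true);
    for ($i = 0; $i < $iterations; ++$i) {
        preg_match('/'.$pattern.'/', $subject);
    }

    return (hrtime(true) - $start) / 1e6;
}

// A tag header as a response could carry it: post-1,post-2,…,post-200.
$header = implode(',', array_map(static fn (int $i): string => 'post-'.$i, range(1, 200)));

foreach (['unanchored' => 'post-42', 'anchored' => banPattern(['post-42'])] as $name => $pattern) {
    printf("%-10s %.2f ms\n", $name, timePattern($pattern, $header));
}
```

Beyond speed, the two patterns differ in correctness: an unanchored `post-4` also matches `post-42`, while the anchored form only matches the exact tag.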

User context hash

We use Varnish and user context hash on very high traffic websites, and we experienced various performance and stability issues that I wanted to share with you. Credit for these findings belongs not to me but to my client and their discussions with Varnish support. I think it’s important enough to share here.

User context hash is based on a new header that we vary on. This header itself is calculated on the /_fos_user_context_hash call that itself varies by cookie or authorization header.

If we use user context hash, it’s because we want the cardinality of a user context page to be as low as possible to avoid pointless computation.

But the cardinality of the /_fos_user_context_hash is linked to the number of visitors, and it can be very big. We rely on the Vary header to make the distinction and find all the cached variants.

The Vary header is like a SQL query on a non-indexed field, against a possibly enormous table if you have traffic like this one. To fix it we just need to index this field.

sub vcl_hash {

if (req.url == "/_fos_user_context_hash") {

hash_data(req.http.<Cookie or Authorization>);

}

# VS: NOTE: No return, fallthrough to builtin VCL

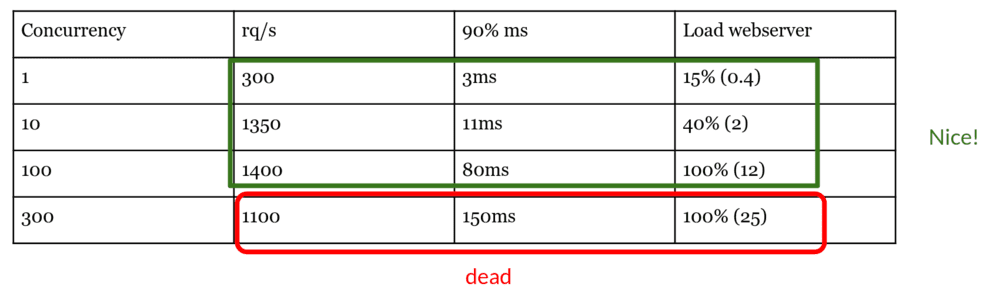

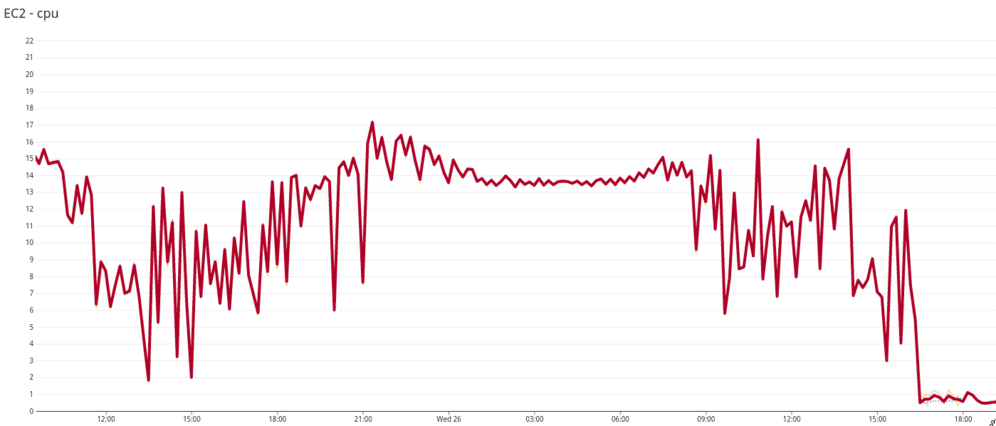

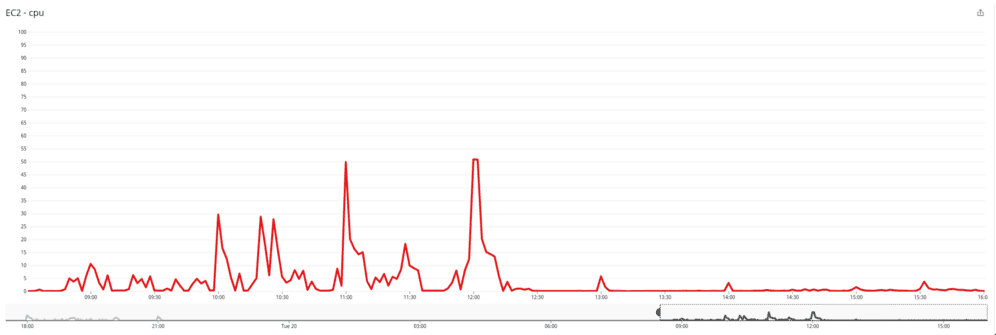

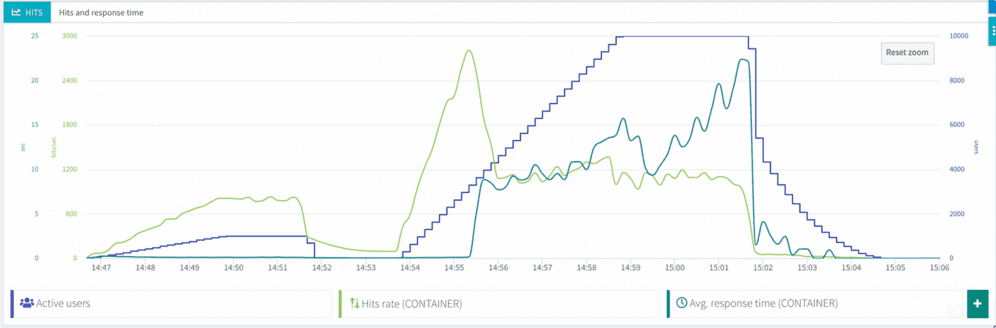

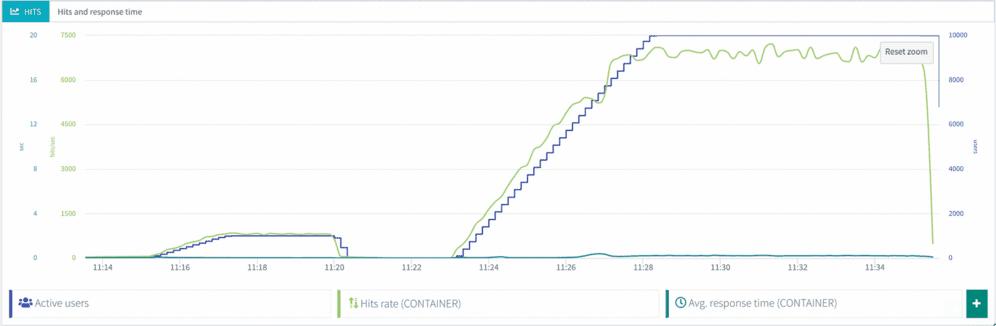

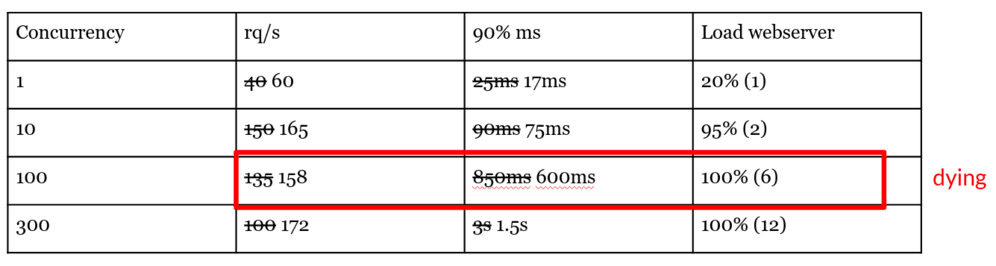

}Before on our load test:

After:

On production, we reduced our number of Varnish servers by ten. The behavior is now explained in the documentation of the next Varnish release, along with a warning. The warning is triggered if there are more than 10 variants. And I hope everybody using user context hash has more than 10 logged-in users, otherwise why bother?
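The "query without an index" analogy can be made concrete with a toy model in PHP. It is only an illustration of the two lookup strategies, not of Varnish internals: `findVariantByScan()` walks a list of variants stored under one key, as a Vary-based lookup does, while `findVariantByKey()` uses the cookie as part of the key, as the `hash_data()` call does. Both function names and the session values are made up for the example.

```php
<?php
declare(strict_types=1);

/** One cache key, many variants: every lookup walks the list until the cookie matches. */
function findVariantByScan(array $variants, string $cookie): ?string
{
    foreach ($variants as $variant) {
        if ($variant['cookie'] === $cookie) {
            return $variant['hash'];
        }
    }

    return null;
}

/** Cookie folded into the key: the lookup is a single keyed access. */
function findVariantByKey(array $index, string $cookie): ?string
{
    return $index[$cookie] ?? null;
}

// 50,000 hypothetical sessions, each with its own cached context hash.
$variants = [];
$index = [];
for ($i = 0; $i < 50_000; ++$i) {
    $cookie = 'PHPSESSID=session'.$i;
    $hash = 0 === $i % 3 ? 'subscriber' : 'default';
    $variants[] = ['cookie' => $cookie, 'hash' => $hash];
    $index[$cookie] = $hash;
}

$last = 'PHPSESSID=session49999';
var_dump(findVariantByScan($variants, $last) === findVariantByKey($index, $last));
```

Both strategies return the same answer, but the scan has to compare up to 50,000 entries for the most recent visitor, and that cost grows with the number of logged-in users.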

Cookies sent to API, preventing cache

The API is called via the backoffice coded in react. The domain is api.<something>. A cookie has been added at the root level from the www subdomain. The user logged on the BO had no cache because of that cookie.

I was in charge of the issue “the backoffice is slow”. But as I have aggressive rules regarding which cookies I accept, my browser was not sending that cookie and I got cache hits where normal users did not.

Now in the VCL of the API we strip all cookies.

```vcl
sub vcl_recv {
    unset req.http.Cookie;
}
```

Cheat code: using a commercial Varnish will prevent a lot of issues

I showed you that you can get everything you need from the community version. But I also showed you that we had issues with it.

A commercial one exists and it provides very helpful tools, tips and access to a wide range of experts that would avoid the hassle.

Sharing this information today with you does not reduce the quality of Varnish (it’s awesome), but it’s a powerful tool that is better taken care of by experts. You should definitely consider their commercial package.

The commercial version will offer you:

- a cache shared between Varnish servers, which reduces calls to the backend even further and so is faster in every way;

- handle tags with ykey (not that important anymore now that the regexp is faster);

- better cookies management (cookieplus);

- better invalidation across cluster;

- VCL review by experts;

- and a lot more.

Fastly also provides a hosted modified version of Varnish which gives you access to a nicer programmable interface.

Searching for cool features in the VCL language will often bring you to the Fastly documentation. You will be happy to read that it provides the feature you need, until you understand that it is not accessible to you. The same goes for the commercial version of Varnish itself, by the way.

“Fastly’s cache servers run an evolution of Varnish which diverged from the community project at version 2.1. Varnish Configuration Language (VCL) remains the primary way to configure our cache behaviors, and our VCL syntax derives from Varnish 2.1.5, but has been significantly upgraded and extended for Fastly-specific features. While it’s possible that VCL written for the open source Varnish will work on Fastly, our VCL variant should be seen as a separate language. See using VCL for detailed information about the architecture of VCL configurations.”

Fastly powers a lot of sites, as we saw during their crash last week. They are awesome, even if their 503 page could be cleaner.

Funny story

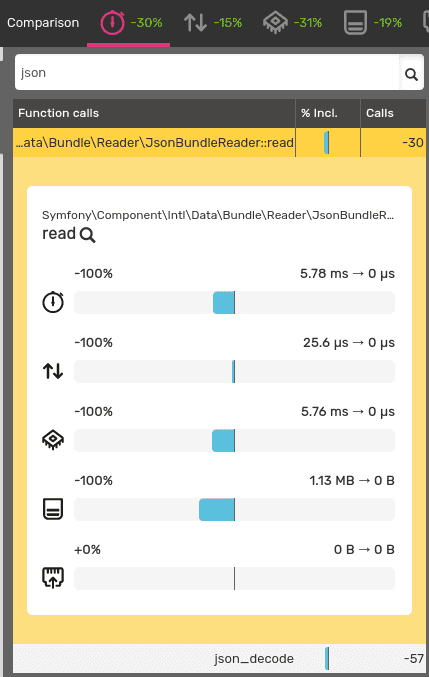

There was actually a bug in Symfony 5.2 when I ran my load tests. That impacted the performance of all the PHP code before the reverse proxy.

It’s much better now, but still far from what a true reverse proxy gives you.

I see two points there:

- Varnish protects you from mistakes like that, and it’s great;

- It’s also awesome that new Symfony and PHP versions are faster than the one before.

Use the best of both worlds.

Include the performance in your CI

Performance is always a tradeoff. What is fine on your computer or in the development environment will not be the same as the real workload in the real prod environment.

You should monitor all the things you need to know and use your CI to include performance in your acceptance criteria before merging code.
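As a sketch of what such an acceptance criterion could look like, the script below checks response times collected by a load test against a budget. Everything in it is an assumption made for the example: the helper names `percentile()` and `withinBudget()`, the sample timings, the 95th percentile and the 200 ms budget.

```php
<?php
declare(strict_types=1);

/** Nearest-rank percentile of a non-empty list of numbers. */
function percentile(array $samples, float $p): float
{
    if ([] === $samples) {
        throw new InvalidArgumentException('At least one sample is required.');
    }

    sort($samples);
    $rank = (int) ceil($p / 100 * count($samples));
    // Clamp the rank so that out-of-range percentiles stay inside the list.
    $index = min(count($samples), max(1, $rank)) - 1;

    return (float) $samples[$index];
}

/** True when the chosen percentile of the samples does not exceed the budget. */
function withinBudget(array $samplesMs, float $budgetMs, float $p = 95.0): bool
{
    return percentile($samplesMs, $p) <= $budgetMs;
}

// Hypothetical response times in milliseconds collected by a load test.
$samplesMs = [12, 15, 11, 14, 250, 13, 12, 16, 15, 14];

// A CI job could fail the build when the budget is exceeded.
echo withinBudget($samplesMs, 200.0) ? "within budget\n" : "too slow\n";
```

With these samples the single 250 ms outlier pushes the 95th percentile over the budget, so the script prints `too slow`, the kind of regression a median alone would hide.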

Performance is a feature and a tool. By moving the load to Varnish, you can use your backend to compute more useful stuff.

Takeaways: Performance is addictive

There are a few takeaways. First you should always monitor what happens on your platform.

I showed you an incremental way of managing performance and scalability of your Website.

Varnish is free to install and free to use. I always put it in front of my Web servers as it causes me no harm by default. It allows me to scale and deliver a fast user experience very easily. Just add a few extra cache headers and you are done with it.

More complex scenarios will need a more complex approach, and I hope I gave you some insights on that. As always, Varnish itself should be monitored because it should cost you less than 1% CPU. Otherwise you have an issue, and you might need some help on that.

Varnish allows us to have an enormous traffic with stellar performance and a managed accuracy of data. I really encourage you to go a step further in the search for performance.

Having Varnish lets me have simple code. Having this super power in my stack and using it really well allows me to have simple and understandable code. We want to avoid layers and layers of cache in our PHP and domain layers.

Complicating the code can take a page from 1s to 50ms, with a lot of pain. With a good reverse proxy it can be faster and almost free, and you can keep your code at 1s. It’s less error-prone because it protects you.

The two most difficult things in computer science are naming things and invalidating cache. Put Varnish in place from the beginning, define the invalidation strategy and you will be okay.
